Construction kits for evolving life

(Including evolving minds and mathematical abilities.)

The scientific/metaphysical explanatory role of construction kits:

Fundamental and derived kits, concrete, abstract and hybrid kits,

meta-construction kits.

(All with deep mathematical properties,

creating products with mathematical properties.)

Aaron Sloman

School of Computer Science, University of Birmingham, UK

http://www.cs.bham.ac.uk/~axs

------------------------------------------

The previous title, "Construction kits for biological evolution", was used for my chapter in the Springer 2017 book The Incomputable: Journeys Beyond the Turing Barrier (see below).

This document is work in progress, extending that chapter.

------------------------------------------

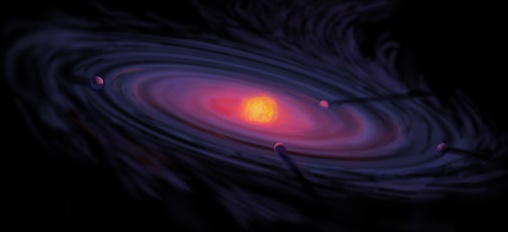

This is part of the Turing-inspired Meta-Morphogenesis (M-M) project,

also called the Self-Informing Universe (SIU) project, which asks:

How can a cloud of dust give birth to a planet

full of living things as diverse as life on Earth?

(Including mathematicians like Archimedes, Euclid, Zeno, and many others.)

What features of the physical universe make that possible?

[NASA artist's impression of a protoplanetary disk, from WikiMedia]

This document is available in two formats: HTML and PDF

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/construction-kits.html

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/construction-kits.pdf


Link to the Metamorphosis web site added below.

Apology: 15 April 2024:

Recent additions have produced formatting inconsistencies. I may not find time to sort this out.

The metamorphosis document extends these ideas

Link added 15 Apr 2024

https://www.cs.bham.ac.uk/research/projects/cogaff/misc/metamorphosis.html

We need to change teaching and research in core philosophy of science. Instead of focusing only on the nature of concepts, laws, technology, and reasoning processes related to producing, predicting and explaining configuration changes in already known classes of spaces (e.g. involving only structures and processes studied by physicists), philosophers should also learn to think about systems that can repeatedly generate new types of possibility (including new possible structures and new possible processes), and can repeatedly produce mechanisms (construction kits) that produce novel structures, mechanisms, processes, and construction kits, adding new layers of complexity (including repeatedly adding new types of construction kit for building construction kits). This is a major theme of the metamorphosis web site linked above. Mike Levin's online work (and related presentations on biological mechanisms), referenced below, should be included in teaching materials for courses on philosophy/methodology of science, although, as far as I know, he has not yet discussed all of those problems.

Mike Levin's work

Added July 2021

The work of Mike Levin at Tufts University includes some major extensions to the ideas presented here, by drawing attention to some of the enormous variety of processes, mechanisms and ontologies involved in biological development and evolution, for example in this video presentation:

https://www.youtube.com/watch?v=L43-XE1uwWc

(The title "Robot cancer" does not do justice to the breadth and depth of the presentation.)

Additional information about his work and collaborative activities is here:

https://www.liebertpub.com/doi/full/10.1089/soro.2022.0142

However, he seems not to have noticed several problems discussed in this and other web sites I have produced, especially the metamorphosis website mentioned above, including the problem of explaining how insect metamorphosis is controlled.

NEWS JANUARY 2020

There seems to be significant overlap between the ideas of this project and the ideas presented by Neil Gershenfeld here:

https://www.edge.org/conversation/neil_gershenfeld-morphogenesis-for-the-design-of-design

'Morphogenesis for the Design of Design', Edge Talk, 2019, raising important issues from an engineering point of view.

This is part of the Meta-Morphogenesis (M-M) project.

Additional topics are included or linked at the main M-M web site:

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/meta-morphogenesis.html

The Beginning: Two book chapters

Ideas about Meta-Morphogenesis in biological evolution were first presented in a paper written in 2011 as my invited contribution [Sloman 2013b] to part 4 of [Cooper and van Leeuwen 2013], commenting on Turing's paper "The Chemical Basis of Morphogenesis" [Turing 1952]. That paper introduced the Meta-Morphogenesis project and the conjecture that Turing would have worked on such a project if he had not died two years after publishing his morphogenesis paper.

Since then, published and unpublished papers on the Birmingham CogAff website have extended the ideas in several directions. One of the most important is the theory of evolved construction kits, presented below, though still in its infancy. Another important theme is the creativity of evolution. Not only are the biological mechanisms of evolution enormously creative; they are also indirectly responsible for all the other types of creativity on this planet, including human creativity.

Some of the ideas concerning creativity of evolution are in this incomplete paper on creativity:

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/creativity.html

(work in progress). See also [Sloman 2012].

The main idea behind the label "Meta-Morphogenesis" was that some of the changes produced by the mechanisms of evolution (an important type of morphogenesis) extend the mechanisms of evolution (hence the 'Meta-' in 'Meta-morphogenesis').

The most significant products of evolution able to feed back into the mechanisms of evolution include many physical/chemical products and also evolved forms of information processing.

Later extensions to this document proposed that processes of individual development also extend the mechanisms of development. So Meta-Morphogenesis happens not only on evolutionary time-scales (the original idea) but also during processes of individual development. During the months after November 2020, this became the major focus of new ideas in the Meta-Morphogenesis project. The ideas were presented both in documents on this web site and in invited talks (using Zoom), some of which were recorded and made available online. It was during this period that I became aware that the work of Mike Levin was directly relevant.

Most of the advances in studies of evolution have been concerned with the physical and chemical structures, along with the physical forms of organisms, the environments with which they have coped, and aspects of their behavioural competences inferred from observations of existing species, and speculative inferences from fossil records and archeological evidence.

But the ancient forms of information processing do not leave fossil records, although some of their products do. And I do not know of any systematic attempt to use varied forms of direct and indirect evidence to infer ancient forms and mechanisms of biological information processing.

In contrast, inspired by Turing's work, the main aim of the M-M project, from the beginning, was to identify changes in types of information processing produced by evolution, including new forms of information processing that directly or indirectly altered the scope of biological evolution (hence the "Meta-").

Two important examples of such changes were the development of sexual reproduction, and later on the development of mate-selection mechanisms. However there must have been many more changes concerned with uses of information of many kinds for many purposes, including collaborative uses of information, e.g. in swarming and trail-following behaviours.

After I had contributed four papers to the Turing Centenary Volume, including the paper in Part IV introducing the Meta-Morphogenesis project (without mentioning construction kits), Barry Cooper and Mariya Soskova invited me to give a talk at a Workshop on "The Incomputable", held at the Kavli Centre in June 2012, at which I presented some of the ideas about the M-M project. They later invited me to contribute a chapter to a follow-up book, and the focus on construction kits came out of that invitation, which stated:

"This new book will aim to address the theme of incomputability with a wide readership in mind, filling a real gap in the literature. Our choice of potential contributors prioritises leading researchers who can write, and are able to contribute something for both experts and non-experts. We see the book as uniquely focusing on this neglected aspect of the Turing legacy, and sharing more generally some appreciation of the beauty and fundamental importance of contemporary research at the computability/incomputable interface."

Since my 1962 thesis defending Kantian philosophy of mathematics, I have had a special interest in the nature of ancient forms of mathematical reasoning and discovery (e.g. the amazing, deep ancient discoveries in geometry and topology reported by Archimedes, Euclid, Zeno and others). These have features that had so far resisted replication or modelling in AI theorem provers, and that did not seem to be explicable in terms of current theories about brain mechanisms. So I started writing a paper presenting examples of types of mathematical reasoning and discovery not yet replicated in AI, intending to propose a strategy for investigating whether the gaps were simply due to limitations in current ideas about automated reasoning, or indicated some more fundamental, previously unnoticed problem about what current AI models of computation can achieve.

My ideas about possible gaps were different from the ideas of AI critics such as Searle (1981) and Penrose (1989) and (1994), both of whom I had criticised in Sloman (1992) and other papers.

So, in the spirit of the M-M project, I proposed collecting examples of evolutionary transitions in biological information processing that might eventually have led to the evolution of ancient mathematical minds. The idea that construction kits would have to play a role was triggered by a talk given by Birmingham biologist Juliet Coates on the evolution of biological toolkits that were necessary for the development of the earliest plants, e.g. enabling them to produce structures that permitted vertical growth upwards from a supporting medium. (See [Coates, et al. 2014].)

This made me realise that there was a very general notion missing from the Darwin/Wallace theory of evolution in all the versions that I had encountered, and the plant toolkit was a special case.

The missing notion was that evolution did not merely produce all the different types of organism on the planet, with all their different types of body-part with different functions, and different observed behaviours. Evolution must also have produced many different sorts of construction kit, including construction kits for producing and extending information processing mechanisms. Some of these will have required new body parts, just as human-engineered information processing mechanisms use different sorts of physical mechanism. Other construction kits may have produced more abstract mechanisms, in something like the way human information engineering has produced new programming languages, new programs, new operating systems and new software tools, including new virtual machines, for use in building, testing and maintaining new packages, and so on.

NB. None of this implies that the biological information processing systems were Turing machines, or were capable of being implemented on Turing machines, or even that all the forms of information processing were digital, using only discrete physical structures and processes. Human engineers have used both digital and analog (continuously varying) mechanisms for centuries, and I see no reason for ruling out a combination. Turing's morphogenesis paper indicates that he was interested in forms of continuous variation that could produce both continuous and discrete changes, suggesting that he was more open-minded than some of his admirers.

These more abstract construction kits and their products are as important for the M-M project as the construction kits for building and assembling new physical/chemical components. This is something Schrödinger appreciated in [1944], before the development of hardware and software computing technologies had begun.

The whole process of creating designs for organisms, parts of organisms, and construction kits for instantiating those designs, must have started with a "Fundamental" construction kit (FCK) with the potential to generate all the other construction kits. That FCK must have been provided by fundamental physical features of the universe, long before there was any life on this planet (or anywhere else).

This implied that the M-M theory had to be extended to accommodate construction kits for generating construction kits -- an idea that should be familiar to anyone with experience of designing and building complex software systems. (My own experience included contributions to Poplog, and the SimAgent toolkit, built on Poplog [Sloman 1996b].)
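The idea of kits that build kits can be illustrated with a toy sketch in Python. Everything in it is hypothetical and chosen only for illustration: a "kit" is modelled as a set of parts plus one rule for combining two parts, `assemblies` lists what a kit can build in a bounded number of rounds, and `derive_kit` acts as a minimal meta-construction kit whose output is a new kit whose parts are the products of an older kit. It is a sketch under those assumptions, not a model of any biological mechanism.

```python
from itertools import product

def make_kit(parts, combine):
    """A toy kit: a set of parts plus a rule for combining any two parts."""
    return {"parts": frozenset(parts), "combine": combine}

def assemblies(kit, rounds):
    """Everything the kit can build in at most `rounds` rounds of pairwise combination."""
    built = set(kit["parts"])
    for _ in range(rounds):
        built |= {kit["combine"](x, y) for x, y in product(built, repeat=2)}
    return built

def derive_kit(kit, rounds):
    """Toy meta-construction kit: returns a new kit whose parts are the
    assemblies an older kit can build, reusing the older kit's combining rule."""
    return make_kit(assemblies(kit, rounds), kit["combine"])

# Toy "fundamental" kit: two atoms, joined by bracketing.
fck = make_kit({"a", "b"}, lambda x, y: f"({x}{y})")
# Derived kit: its parts are everything fck builds in two rounds.
dck = derive_kit(fck, 2)

target = "(((ab)(ab))((ab)(ab)))"
print(target in assemblies(fck, 2))  # False: needs a third round
print(target in assemblies(fck, 3))  # True
print(target in assemblies(dck, 1))  # True: one round suffices
```

In this toy the derived kit makes nothing new possible: one round of `dck` reaches exactly the same products as three rounds of `fck`. What changes is how quickly deep structures can be reached, because earlier products are reused as ready-made parts.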

In order to identify missing components in our current toolkits for building information processing systems, I began collecting examples of types of mathematical discovery that did not fit current AI reasoning systems, and trying to categorise them into different types and subtypes.

That process converted my contribution to the Incomputable book into a first draft investigation of types of construction kit required by biological evolution. I hoped the project would shed new light on biological construction kits for information processing mechanisms that might have played a role in the evolution of mathematical discovery and reasoning mechanisms that were already producing rich results several thousand years ago, long before the invention/discovery of modern logic, algebra, set theory, formal systems, proof theory, etc.

An initial progress report, [Sloman 2017a], entitled "Construction Kits for Biological Evolution", presenting ideas developed between 2014 and 2016, was eventually published by Springer in 2017 in The Incomputable (Cooper and Soskova 2017), although, sadly, Barry Cooper, whose encouragement was crucial to this project, did not live to see it.

(The book chapter was initially frozen in Dec 2015, then modified in September and December 2016, especially to undo many erroneous changes by Springer copy editors. See my rant against copy editors here.)

This paper continues the work on construction-kits, perhaps the single most important part of the M-M project, for the time being. Several parts of the book chapter have been extended and partly rewritten. This version is still changing, and the externally visible online version will be changed from time to time. Please store links, not copies, as copies will become out of date.

Evolved Compositionality (Added 30 Nov 2018, after SYCO-1 Workshop. Modified 12 Feb 2019)

Most philosophies of learning and science assume there's a fixed type of world to be understood by a learner or a community of scientists. Biological evolution, however, in collaboration with its products, is metaphysically creative, and constantly extends the mathematical diversity and complexity of the world to be understood, and constantly extends the types of learners with new powers. Its more advanced (recently evolved) learners have multi-stage genomes that extend powers within a learner in different ways at different stages (during epigenesis), by extending the powers produced by the earlier stages and their environments, which can differ across generations and across geographical locations. This process constantly adds new compositional powers (new types of compositionality), making new, more complex step-changes available to natural selection. Most will probably be fatal, but enough are viable to have a major impact on the generative power of biological evolution. (This all needs to be documented in far more detail, unless someone has already done that.)

So evolution extends genomes, which get changed by evolution, and new multi-layered genomes extend the ways in which individuals change themselves as they get changed by the environment.

Moreover individuals with new genomes can change the environment in new ways, producing new types of selection pressure.

Sexual reproduction can combine different evolved structures with different histories making step-changes to the structural variety and potential in the gene-pool.

The contrast between the powers of genetic algorithms (GAs) and Genetic programming (GP) illustrates some of the differences discussed here. [The GA/GP contrast is controversial, however, and there are likely to be differences between the impact in computer-based experiments on artificial evolution and the impact of the mechanisms described here on biological evolution. For more information and references, see https://en.wikipedia.org/wiki/Genetic_programming]
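A toy Python sketch may make the contrast concrete. It assumes the simplest textbook forms of each technique: bit-flip mutation on a fixed-length bit string (GA) and subtree-replacement mutation on arithmetic expression trees (GP). The function names and parameters are illustrative, not taken from any particular GA or GP library, and the sketch says nothing about biological genomes.

```python
import random

def ga_mutate(bits, rate, rng):
    """GA-style mutation: flip each bit with probability `rate`.
    The genome keeps the same length and layout."""
    return [b ^ 1 if rng.random() < rate else b for b in bits]

# GP-style genome: a leaf ("x" or a small int) or a tuple (op, left, right).
OPS = {"+": lambda a, b: a + b, "*": lambda a, b: a * b}

def random_tree(depth, rng):
    """Grow a random expression tree with at most `depth` levels of operators."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(["x", rng.randint(0, 3)])
    op = rng.choice(list(OPS))
    return (op, random_tree(depth - 1, rng), random_tree(depth - 1, rng))

def gp_mutate(tree, rng, depth=2):
    """GP-style subtree mutation: replace one randomly chosen subtree with a
    freshly grown one, so the genome can grow, shrink and change structure."""
    if not isinstance(tree, tuple) or rng.random() < 0.3:
        return random_tree(depth, rng)
    op, left, right = tree
    if rng.random() < 0.5:
        return (op, gp_mutate(left, rng, depth), right)
    return (op, left, gp_mutate(right, rng, depth))

def evaluate(tree, x):
    """Evaluate an expression tree at input x."""
    if isinstance(tree, tuple):
        op, left, right = tree
        return OPS[op](evaluate(left, x), evaluate(right, x))
    return x if tree == "x" else tree

def size(tree):
    """Number of nodes in an expression tree."""
    if isinstance(tree, tuple):
        return 1 + size(tree[1]) + size(tree[2])
    return 1

rng = random.Random(1)
genome_ga = [0, 1, 1, 0, 1, 0, 0, 1]
genome_gp = ("+", "x", ("*", "x", 2))
print(len(ga_mutate(genome_ga, 0.25, rng)))  # 8: length never changes
print(size(genome_gp), size(gp_mutate(genome_gp, rng)))  # 5, then a seed-dependent size
```

The design point is the shape of the genome: GA mutation can only change values in slots that already exist, whereas GP mutation can replace a leaf by a whole new subtree, so that variation operates on compositional structure.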

Together, all these processes combined with non biological external influences can use the compositionality of the genome to produce step changes at different levels of abstraction into new regions of the space of possibilities. This continually produces not only new designs for individuals, but also extends the space of available reproductive and developmental trajectories, including continually adding new, more abstract and general levels of compositionality, allowing previous products of evolution to be combined in new ways. Hence the label: The Meta-Configured genome -- explained more fully below.

New capabilities can combine with new forms of motivation to produce new behavioural capabilities able to contribute to achieving new types of goal. Some of these processes also require new forms of motivation during development, for example new forms of play, and in some cases play fighting.

Eventually these processes produced minds able to make deep mathematical discoveries and to use the results in increasingly sophisticated scientific theories and engineering applications. But we still don't know how to replicate those powers in human-designed machines, despite all the advances in AI.

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/architecture-based-motivation.html

A more detailed discussion of the role of compositionality in evolution is in:

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/sloman-compositionality.html

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/sloman-compositionality.pdf

[Contrast: "Ontogeny recapitulates phylogeny" (Haeckel), a much simpler idea.]

Updated:

15 Apr 2024 (link to Metamorphosis website added);

29 Nov 2018 (Compositionality); 13 Jan 2019; 12 Feb 2019;

14 Jan 2018 (Memo functions and genome size); 1 Jun 2018; 11 Nov 2018;

May 2017; 3 Oct 2017 (Minor corrections);

10 Oct 2016 (including correcting "kit's" to "kits"!); 10 Feb 2017;

3 Feb 2016; 10 Feb 2016; 20 Feb 2016; 23 Feb 2016; 19 Mar 2016;

30 Jun 2016; 27 Dec 2016

Original version: December 2014


Other versions and related papers.

This document is occasionally copied to slideshare.net, making it available in flash format. That version will not necessarily be updated whenever the html/pdf versions are. (Not all the links work in the slideshare version.) Slideshare version last updated 10 Oct 2016. See http://www.slideshare.net/asloman/construction-kits

(Note: Slideshare now does not allow updating, a dreadful change of policy.)

Closely related online papers (many also have PDF versions):

Overview of the Meta-Morphogenesis project, and some of its history:

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/meta-morphogenesis.html

Alan Turing's 1938 thoughts on intuition vs ingenuity in mathematical reasoning. Did he unwittingly re-discover key aspects of Kant's philosophy of mathematics (illustrated in http://www.cs.bham.ac.uk/research/projects/cogaff/misc/kant-maths.html)?

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/turing-intuition.html

Why can't (current) machines reason like Euclid or even human toddlers? (And many other intelligent animals.)

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/ijcai-2017-cog.html

Virtual Machine Functionalism (VMF) (The only form of functionalism worth taking seriously in Philosophy of Mind and theories of Consciousness):

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/vm-functionalism.html

Multiple Foundations For Mathematics: Neo-Kantian (epistemic/cognitive) foundations; Mathematical foundations; Biological/evolutionary foundations; Cosmological/physical/chemical foundations; Metaphysical/Ontological foundations; Multi-layered foundations; others ???

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/maths-multiple-foundations.html

Using construction kits to explain possibilities (Defending the scientific role for explanations of possibilities, not just laws.):

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/explaining-possibility.html

The Creative Universe (Early draft, begun March 2016):

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/creativity.html

The Birmingham Cognition and Affect project (begun 1991, extending ideas developed earlier while I was at Sussex University):

http://www.cs.bham.ac.uk/research/projects/cogaff/

Tentative non-mathematical thoughts on entropy, evolution, and construction-kits (Entropy, Evolution and Lionel Penrose's Droguli):

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/entropy-evolution.html

A partial index of papers and discussion notes on this web site is in:

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/AREADME.html

Ongoing work on fundamental and derived construction kits is summarised below.

A separate "background" document on Evelyn Fox Keller (1 Mar 2015)

A few notes on Evelyn Fox Keller's papers on Organisms, Machines, and Thunderstorms: A History of Self-Organization, in Historical Studies in the Natural Sciences, Vol. 38, No. 1 (Winter 2008), pp. 45-75 and Vol. 39, No. 1 (Winter 2009), pp. 1-31:

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/keller-org.html

NOTE 16 Jan 2018

Related work in Biosemiotics

Alexei Sharov drew my attention to deep, closely related work by the Biosemiotics research community, e.g.:

http://www.biosemiotics.org/biosemiotics-introduction/

https://en.wikipedia.org/wiki/Biosemiotics

https://en.wikipedia.org/wiki/Zoosemiotics

I shall later try to write something about the connections, and will add links on this site.

Contents

BACKGROUND NOTES (above)

Evolved Compositionality (Above)

A separate "background" document on Evelyn Fox Keller (Above)

ABSTRACT: The need for construction kits (Below)

Philosophical background: What is science? Beyond Popper and Lakatos

Note on "Making Possible":

1 A Brief History of Construction-kits

Beyond supervenience to richer forms of support

A corollary: a tree/network of evolved construction kits

The role(s) of information in life and its evolution

2 Fundamental and Derived Construction Kits (FCK, DCKs)

SMBC comic-strip comment on "fundamentality"

2.1 Combinatorics of construction processes

Abstraction via parametrization

New tools, including virtual machinery

NOTE on vision (Added 3 Sep 2017)

Combinatorics of biological constructions

Comparison with use of Memo-functions in computing

Storing solutions vs storing information about solutions

Negative memoization

NOTE: making faster or easier vs making possible:

Figure FCK: The Fundamental Construction Kit

Figure DCK: Derived Construction Kits

2.2 Construction Kit Ontologies

2.3 Construction kits built during development (epigenesis)

Figure EVO-DEVO

2.4 The variety of biological construction kits

NOTE: Refactoring of designs (Added 9 Oct 2016)

2.5 Increasingly varied mathematical structures

IJCAI 2017 presentation (Added 29 Aug 2017)

2.6 Thermodynamic issues

2.7 Scaffolding in construction kits

2.8 Biological construction kits

2.9 Cognition echoes reality

(INCOMPLETE DRAFT. Added 13 Apr 2016)

2.10 Goodwin's "Laws of Form": Evolution as a form-maker and form-user

(DRAFT Added: 18 Apr 2016)

3 Concrete (physical), abstract and hybrid construction kits

3.1 Kits providing external sensors and motors

3.2 Mechanisms for storing, transforming and using information

3.3 Mechanisms for controlling position, motion and timing

3.4 Combining construction kits

3.5 Combining abstract construction kits

4 Construction kits generate possibilities and impossibilities

4.1 Construction kits for making information-users

4.2 Different roles for information

Figure Evol (Evolutionary transitions)

4.3 Motivational mechanisms

5 Mathematics: Some constructions exclude or necessitate others

5.1 Proof-like features of evolution

5.2 Euclid's construction kit

5.3 Mathematical discoveries based on exploring construction kits

5.4 Evolution's (blind) mathematical discoveries

Dana Scott's new route to Euclidean geometry

6 Varieties of Derived Construction Kit

6.1 A new type of research project

6.2 Construction-kits for biological information processing

6.3 Representational blind spots of many scientists

6.4 Representing rewards, preferences, values

7 Computational/Information-processing construction-kits

7.1 Infinite, or potentially infinite, generative power

8 Types and levels of explanation of possibilities

9 Alan Turing's Construction kits

9.1 Beyond Turing machines: chemistry

9.2 Using properties of a construction-kit to explain possibilities

9.3 Bounded and unbounded construction kits

9.4 Towers vs forests (Draft: 2 Feb 2016)


10 Conclusion: Construction kits for Meta-Morphogenesis

11 End Note (Turing's letter to Ashby)

12 Note on Barry Cooper

FOOTNOTES

REFERENCES


ABSTRACT: The need for construction kits

This is part of the Turing-inspired Meta-Morphogenesis project, which aims to identify transitions in information processing since the earliest proto-organisms, partly in order to provide new understanding of varieties of biological intelligence, including the mathematical intelligence that produced Euclid's Elements, without which a great deal of human science and engineering would have been impossible. (Explaining evolution of mathematicians is much harder than explaining evolution of consciousness, since being able to make mathematical discoveries requires common forms of consciousness with some additional features!)

Evolutionary transitions depend on the availability of "construction kits", including the initial "Fundamental Construction Kit" (FCK) based on physics and chemistry, and "Derived Construction Kits" (DCKs) produced by combinations of physical processes (e.g. lava-flows, geothermal activity) and biological evolution, development, learning and culture. Some are meta-construction kits: construction kits for building new construction kits.

Some construction kits used, and in many cases also designed, by humans (e.g. Lego, Meccano, plasticine, sand, piles of rocks) are concrete: they use physical components and relationships. Others (e.g. grammars, proof systems and programming languages) are abstract: they produce abstract entities, e.g. sentences, proofs, and new abstract construction kits. Concrete and abstract construction kits can be combined to form hybrid kits, e.g. games like tennis or croquet, which involve physical objects, players, and rules. There are also meta-construction kits, able to create, modify or combine construction kits. (This list of types of construction kit is provisional, and likely to be extended, including new sub-divisions of some of the types.)

Construction kits are generative: they explain sets of possible construction processes, and possible products, often with mathematical properties and limitations that are mathematical consequences of properties of the kit and its environment (including general properties of space-time).
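As a small illustration of a generative abstract kit, here is a Python sketch of a hypothetical kit whose only products are bracket strings built by two construction steps: wrapping a product in brackets, and concatenating two products. Enumerating the products shows both sides of the claim above. The number of possible products with n bracket pairs follows the Catalan numbers, a mathematical property of the kit rather than of any particular construction process, and some strings (e.g. any of odd length) are impossible products.

```python
def products(max_pairs):
    """Everything a toy abstract kit can build, grouped by number of bracket pairs.

    Hypothetical kit: the empty string, plus two construction steps:
    wrap a product in "(" ")", or concatenate two non-empty products.
    """
    by_pairs = {0: {""}}
    for n in range(1, max_pairs + 1):
        # Wrapping: "(" + product with n-1 pairs + ")".
        built = {"(" + s + ")" for s in by_pairs[n - 1]}
        # Concatenation: two non-empty products whose pair counts sum to n.
        for k in range(1, n):
            built |= {a + b for a in by_pairs[k] for b in by_pairs[n - k]}
        by_pairs[n] = built
    return by_pairs

kit_products = products(6)
print([len(kit_products[n]) for n in range(7)])  # [1, 1, 2, 5, 14, 42, 132]

# Impossibilities follow from properties of the kit: every product is balanced,
# so, e.g., no product has odd length or starts with ")".
every = set().union(*kit_products.values())
print(all(s.count("(") == s.count(")") for s in every))  # True
print(any(len(s) % 2 == 1 for s in every))  # False
```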

Evolution and individual development processes can both make new construction kits possible. Study of the FCK and DCKs can lead us to new answers to old questions, e.g. about the nature of mathematics, language, mind, science, and life, exposing deep connections between science and metaphysics, and helping to explain the extraordinary creativity of biological evolution. (Discussed further in a separate document on creativity.)

Products of the construction kits are initially increasingly complex physical structures/mechanisms. Later products include increasingly complex virtual machines.

Philosophers and scientists were mostly ignorant about possibilities for virtual machinery until computer systems engineering in the 20th Century introduced both new opportunities and new motivations for designing and building increasingly sophisticated types of virtual machinery. The majority of scientists and philosophers, and even many computer scientists, are still ignorant about what has been learnt and its scientific and philosophical (metaphysical) significance, partly summarised in:

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/vm-functionalism.html

One of the motivations for the Meta-Morphogenesis project is the conjecture that many hard unsolved problems in Artificial Intelligence, philosophy, neuroscience and psychology (including problems that have not yet been noticed?) require us to learn from the sort of evolutionary history discussed here, namely the history of construction kits and their products, especially increasingly complex and sophisticated information processing machines, many of which are, or depend on, virtual machines. Some construction kits can produce running virtual machinery supporting processes that include learning, perceiving, experiencing, wanting, disliking, inventing, supposing, asking, deciding, intending, pleasure, pain, and many more. This gives them not only scientific but also philosophical/metaphysical significance: they potentially provide answers to old philosophical questions, some of which have recently been rephrased in terms of a notion of grounding (interpreted as "metaphysical causation" by Wilson (2015)). A related paper on the metaphysical grounding of consciousness (and other aspects of life) in evolution is in preparation.

Some previously unnoticed functions and mechanisms of minds and brains, including the virtual machinery they use, may be exposed by the investigation of origins and unobvious intermediate "layers" in biological information processing systems, based on older construction kits.

Showing how the FCK makes its derivatives possible, including all the processes and products of natural selection, is a challenge for science and philosophy. This is a long-term research programme with a good chance of being progressive in the sense of Imre Lakatos (1980), rather than degenerating.

Later, this may explain how to overcome serious current limitations of AI (artificial intelligence), robotics, neuroscience and psychology, as well as philosophy of mind and philosophy of science. In principle, it could draw attention to previously unnoticed features of the physical universe that are important aspects of the FCK.

Note:

My ideas have probably been influenced in more ways than I recognise by Margaret Boden, whose work has linked AI/Cognitive Science to Biology over several decades, notably in her magnum opus, Mind As Machine: A History of Cognitive Science (Vols 1-2), published by OUP in 2006.


Philosophical Background: What is science? Beyond Popper and Lakatos

How is it possible for very varied forms of life to evolve from lifeless matter, including a mathematical species able to make the discoveries presented in Euclid's Elements?1 Explaining evolution of mathematical insight is much harder than explaining evolution of consciousness! (Even insects must be conscious of aspects of their surroundings, used in control of motivation and actions).2)-------------------------

1 http://www.gutenberg.org/ebooks/21076

2 I have argued elsewhere that the concept of "consciousness" labelled by a noun is problematic, in part because the adjectival forms are more basic than the noun, and the adjectival forms have types of context-sensitivity that can lead to truth-values that depend on context in complex ways. Some of the issues are summarised in http://www.cs.bham.ac.uk/research/projects/cogaff/misc/family-resemblance-vs-polymorphism.html

An outline explanation of the evolutionary possibilities is based on construction kits produced by evolution, starting from a "fundamental" construction kit provided by physics and chemistry. New construction kits make new things (including yet more powerful construction kits) possible.

The need for science to include theories that explain how something is possible has not been widely acknowledged. Explaining how X is possible (e.g. how humans playing chess can produce a certain board configuration) need not provide a basis for predicting when X will be realised, so the theory used cannot be falsified by non-occurrence. Popper [1934] labelled such theories "non-scientific", at best metaphysics. His falsifiability criterion has been blindly followed by many scientists who ignore the history of science. E.g. the ancient atomic theory of matter was not falsifiable, but was an early example of a deep scientific theory. Later, Popper shifted his ground, e.g. in [Popper 1978], and expressed great admiration for Darwin's theory of Natural Selection, despite its unfalsifiability.

Lakatos [1980] extended Popper's philosophy of science, showing how to evaluate competing scientific research programmes over time, according to their progress. He offered criteria for distinguishing "progressive" from "degenerating" research programmes, on the basis of their patterns of development, e.g. whether they systematically generate questions that lead to new empirical discoveries, and new applications. It is not clear to me whether he understood that his distinction could also be applied to theories explaining how something is possible.

Chapter 2 of [Sloman 1978]3 modified the ideas of Popper and Lakatos to accommodate scientific theories about what is possible, e.g. types of plant, types of animal, types of reproduction, types of consciousness, types of thinking, types of learning, types of communication, types of molecule, types of chemical interaction, and types of biological information processing.

-------------------------

3 http://www.cs.bham.ac.uk/research/projects/cogaff/crp/#chap2

The chapter presented criteria for evaluating theories of what is possible and how it is possible, including theories that straddle science and metaphysics. Insisting on sharp boundaries between science and metaphysics harms both. Each can be pursued with rigour and openness to specific kinds of criticism.

A separate paper on "Using construction kits to explain possibilities"4 includes a section entitled "Why allowing non-falsifiable theories doesn't make science soft and mushy", and discusses the general concept of "explaining possibilities", its importance in science, the criteria for evaluating such explanations, and how this notion conflicts with the falsifiability requirement for scientific theories. Further examples are in [Sloman 1996a].

The extremely ambitious Turing-inspired Meta-Morphogenesis project5, first proposed in [Sloman 2013b], depends on these ideas, and will be a test of their fruitfulness, in a combination of metaphysics and science.

-------------------------

4 http://www.cs.bham.ac.uk/research/projects/cogaff/misc/explaining-possibility.html

5 Summarised in http://www.cs.bham.ac.uk/research/projects/cogaff/misc/meta-morphogenesis.html

This paper, straddling science and metaphysics, asks: How is it possible for natural selection, starting on a lifeless planet, to produce billions of enormously varied organisms, living in environments of many kinds, including mathematicians able to discover and prove geometrical and topological theorems? I emphasise the biological basis of such mathematical abilities (a) because current Artificial Intelligence techniques do not seem to be capable of explaining and replicating them (and neither, as far as I can tell, can current neuroscientific theories) and (b) because those mathematical competences may appear to be esoteric peculiarities of a tiny subset of individuals, but arise out of deep features of animal and human cognition, that James Gibson came close to noticing in his work on perception of affordances in [Gibson 1979]. Connections between perception of affordances and mathematical discoveries are illustrated in various documents on this web site. E.g. see [Note 13].

Explaining how discoveries in geometry and topology are possible is not merely a problem related to a small subset of humans, since the relevant capabilities seem to be closely related to mechanisms used by other intelligent species, e.g. squirrels and nest-building birds, and also in pre-verbal human toddlers, when they discover and use what I call "toddler theorems" [Sloman 2013c]. If this is correct, human mathematical abilities have an evolutionary history that precedes humans. Understanding that history may lead to a deeper understanding of later products.

A schematic answer to how natural selection can produce such diverse results is presented in terms of construction kits: the Fundamental (physical) Construction Kit (the FCK), and a variety of "concrete", "abstract" and "hybrid" Derived Construction Kits (DCKs), that together are conjectured to explain how evolution is possible, including evolution of mathematical abilities in humans and other animals, though many details are still unknown. The FCK and its relations to DCKs are crudely depicted below in Figures FCK and DCK. Inspired by ideas in [Kant 1781], construction kits are also offered as providing Biological/Evolutionary foundations for core parts of mathematics, including mathematical structures used by evolution long before there were human mathematicians.

Note on "Making Possible": "X makes Y possible" as used here does not imply that if X does not exist then Y is impossible, only that one route to existence of Y is via X. Other things can also make Y possible, e.g., an alternative construction kit. So "makes possible" is a relation of sufficiency, not necessity. The exception is the case where X is the FCK - the Fundamental Construction Kit - since all concrete constructions must start from it (in this universe -- alternative "possible" universes based on different fundamental construction kits will not be considered here). If Y is abstract, there need not be something like the FCK from which it must be derived. The space of abstract construction kits may not have a fixed "root". However, the abstract construction kits that can be thought about by physically implemented thinkers may be constrained by a future replacement for the Church-Turing thesis, based on later versions of ideas presented here.
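The sufficiency-not-necessity reading of "makes possible" can be pictured as reachability in a graph of construction kits. The following minimal Python sketch assumes a hypothetical "can be used to build" relation between kits labelled FCK, X, Y and Z (the letters are placeholders, not real kits). A route from the FCK to Y through X shows that X makes Y possible; a second route through Z shows that X is not necessary.

```python
from collections import deque

# Hypothetical "can be used to build" relation between construction kits.
# X, Y and Z are placeholder names, not claims about real biological kits.
BUILDS = {
    "FCK": ["X", "Z"],
    "X": ["Y"],
    "Z": ["Y"],
    "Y": [],
}

def reachable(graph, start, target, excluded=frozenset()):
    """Breadth-first search: can `target` be constructed from `start`
    without using any kit listed in `excluded`?"""
    if start in excluded:
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        kit = queue.popleft()
        if kit == target:
            return True
        for nxt in graph.get(kit, []):
            if nxt not in seen and nxt not in excluded:
                seen.add(nxt)
                queue.append(nxt)
    return False

# X makes Y possible: there is a route FCK -> X -> Y.
print(reachable(BUILDS, "FCK", "Y"))                           # True
# X is not necessary: Y is still reachable via Z.
print(reachable(BUILDS, "FCK", "Y", excluded={"X"}))           # True
# Every concrete route starts from the FCK, so without it nothing is built.
print(reachable(BUILDS, "FCK", "Y", excluded={"FCK"}))         # False
```

Excluding both X and Z leaves no route at all, which is the only situation in this toy graph where Y depends on a particular derived kit. The model records which derivations are possible; it says nothing about how the kits themselves came to exist.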

Although my questions about explaining possibilities arise in the overlap between philosophy and science [Sloman 1978, Ch.2], I am not aware of any philosophical work that explicitly addresses the hypotheses presented and used here, though there seem to be examples of potential overlap, e.g. [Bennett 2011, Wilson 2015, Dennett 1995].

There are many overlaps between my work and the work of Daniel Dennett, as well as important differences. In particular, [Dennett 1995] comes close to addressing the problems discussed here, but as far as I can tell he nowhere discusses the need for evolved specific sorts of construction kit (fundamental and derived/evolved, concrete and abstract) to account for the continual availability of new options for natural selection, or the difficulty of replicating human and animal mathematical abilities (especially geometric and topological reasoning abilities) in (current) AI systems. He therefore does not mention the need to gain clues as to the nature of those abilities by investigating relevant evolutionary and developmental trajectories, as proposed here because of hypothesised deep connections between those mathematical abilities and more general animal abilities to perceive and reason about affordances [Gibson 1979]. (I am grateful to Susan Stepney for reminding me of the overlap with Dennett after she read an earlier version of this paper. My impression is that he would regard all the different types of construction kit discussed here as being on the "cranes" side of his "cranes vs skyhooks" metaphor, the other side, skyhooks, being intended to accommodate the sorts of divine intervention postulated by Dennett's opponents, with whom I also disagree.) I also think Dennett has not understood the full significance and causal powers of interactive portions of complex virtual machines, although he refers to them from time to time, often in deprecating terms, using a spurious comparison between virtual machines and centres of gravity. But this is not the place to discuss his arguments: we are basically on the same side in several major debates.

A more detailed discussion of the idea of "making possible" is in a (draft, Summer 2016) paper on how biological evolution made various forms of consciousness possible ("evolutionary grounding of consciousness"): http://www.cs.bham.ac.uk/research/projects/cogaff/misc/grounding-consciousness.html


1 A Brief History of Construction-kits

New introduction added 8 Apr 2016

Many thinkers, including biologists, physicists, and philosophers, have attempted to explain how life as we know it could emerge on an initially lifeless planet. One widely accepted answer, usually attributed to Charles Darwin and Alfred Wallace, is (in the words of Graham Bell (2008)):

"Living complexity cannot be explained except through selection and does not require any other category of explanation whatsoever."

But that does not explain what makes possible the options between which selections are made, apparently available in some parts of the universe (e.g. on our planet) but not others (e.g. the centre of the sun). Earlier versions of this paper suggested that evolutionary biologists have mostly failed to notice, or have ignored, this problem. The work of Kirschner [quoted below] seems to be an exception, though it is not clear that he recognised the requirements for layered construction kits discussed here.

Some thinkers have tried to explain how some of the features of physics and chemistry suffice to explain some crucial features of life. E.g. [Schrödinger 1944] gave a remarkably deep and prescient analysis showing how features of quantum physics could explain how genetic information expressed in complex molecules composed of long aperiodic sequences of atoms could make reliable biological reproduction and development possible, despite all the physical forces and disturbances affecting living matter. Without those quantum mechanisms the preservation of precise complex genetic information across many generations during reproduction, and during many processes of cell division in a developing organism, would be inexplicable, especially in view of the constant thermal buffeting by surrounding molecules. (So quantum mechanisms can help with local defeat of entropy?)

Portions of matter encoding complex biological "design" information need to be copied with great accuracy during reproduction in order to preserve specific features across generations. Design specifications also need to maintain their precise structure during all the turmoil of development, growth, etc. In both cases, small variations may be tolerated and may even be useful in allowing minor tailoring of designs. (This is explained in connection with parametrization of design specifications below.) However, if a change produces shortness on the left side of an animal without producing corresponding shortness on the right side, the animal's locomotion and other behaviours may be seriously degraded. The use of a common specification that controls the length of each side would make the design more robust, while avoiding the rigidity of a fixed length that rules out growth of an individual or variation of size in a species.
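The robustness point about a common specification for both sides can be made concrete with a toy model. In this Python sketch (an illustrative assumption, not a model of any real developmental mechanism), a genome either has one shared "side" parameter or separate "left" and "right" parameters, and a point mutation rescales one randomly chosen parameter by up to 10%.

```python
import random

def develop(genome):
    """Map a toy genome to (left, right) limb lengths.
    A 'shared' genome has one 'side' parameter used for both limbs;
    an 'independent' genome has separate 'left' and 'right' parameters."""
    if "side" in genome:
        return genome["side"], genome["side"]
    return genome["left"], genome["right"]

def point_mutation(genome, rng, scale=0.1):
    """Rescale one randomly chosen parameter by a factor in [1-scale, 1+scale]."""
    mutant = dict(genome)
    key = rng.choice(sorted(mutant))
    mutant[key] *= 1 + rng.uniform(-scale, scale)
    return mutant

def asymmetry(genome):
    left, right = develop(genome)
    return abs(left - right)

rng = random.Random(0)
shared = {"side": 1.0}
independent = {"left": 1.0, "right": 1.0}

shared_mutants = [point_mutation(shared, rng) for _ in range(1000)]
independent_mutants = [point_mutation(independent, rng) for _ in range(1000)]

# Mutating the shared parameter changes size but never breaks symmetry.
print(max(asymmetry(g) for g in shared_mutants))  # 0.0
# Mutating an independent parameter lets one side drift from the other.
print(sum(asymmetry(g) > 0 for g in independent_mutants))
```

Sizes still vary among the shared-parameter mutants, so the common specification avoids the rigidity of a fixed length; it only removes the possibility of one side changing without the other.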

Schrödinger's discussion of requirements for encoding of biological information seems to have anticipated some of the ideas about requirements for information-bearers later published by Shannon, and probably influenced the work that led to the discovery of the double helix structure of DNA.

But merely specifying the features of matter that support forms of life based on matter does not answer the question: how do all the required structures come to be assembled in a working form? It might be thought that that could be achieved by chance encounters between smaller fragments of organisms producing larger fragments, and sometimes producing variants "preferred" by natural selection. (Compare the demonstration of "Droguli" by Lionel Penrose mentioned in http://www.cs.bham.ac.uk/research/projects/cogaff/misc/entropy-evolution.html#new-droguli)

But any engineer or architect knows that assembling complex structures requires more than just the components that appear in the finished product: all sorts of construction kits, tools, forms of scaffolding, and ancillary production processes are required. I'll summarise that by saying that biological evolution would not be possible without the use of construction kits and scaffolding mechanisms, some of which are not parts of the things they help to create. A more complete investigation would need to distinguish many sub-cases, including the kits that are and the kits that are not produced or shaped by organisms that use them.

The vast majority of organisms can neither assemble themselves simply by collecting inorganic matter or individual atoms or sub-atomic components from the environment, nor maintain themselves by doing so. Exceptions are organisms that manufacture all their nourishment from water and carbon dioxide using photosynthesis, and the (much older) organisms that use various forms of chemosynthesis to produce useful organic molecules from inorganic molecules [Bakermans (2015), Seckbach & Rampelotto (2015)]. The latter are often found in extremely biologically hostile environments (hence the label "extremophiles"), some of which may approximate conditions before life existed.

Unlike extremophiles, most organisms, especially larger animals, need to acquire part-built fragments of matter of various sorts by consuming other organisms or their products. Proteins, fats, sugars, and vitamins are well known examples. This food is partly used as a source of accessible energy and partly as a source of important complex molecules needed for maintenance, repair, and other processes. Symbiosis provides more complex cases where each of the species involved may use the other in some way, e.g. as scaffolding or protection against predators, or as a construction mechanism, e.g. synthesising a particular kind of food harvested by the other species.

These examples show that unlike human-made construction kits, many biological construction kits are not created by their users but were previously produced by biological evolution without any influence from the users. This can change after an extended period of symbiosis or domestication, which can influence evolution of both providers and users. Compare the role of mate-selection mechanisms based on previously evolved cognitive mechanisms that later proved useful for evaluating potential mates.

Even plants that use photosynthesis to assemble energy-rich carbohydrate molecules from inorganic molecules (water and carbon dioxide) generally need to grow in an environment that already has organic waste matter produced by other living things, as any gardener, farmer, or forester knows.

All this implies that an answer to our main question must not merely explain how physical structures and processes suffice to make up all living organisms and explain all their competences. The physical world must also explain the possibility of the required "construction kits", including cases where some organisms are parts of the construction kits used by others. This paper attempts to explain, at a very high level of generality, some of what the construction kits do, how they evolve partly in parallel with the organisms that require them, and how importantly different types of construction kit are involved in different evolutionary and developmental processes, including construction kits concerned with information processing. This paper takes only a small number of small steps down a very long, mostly unexplored road.

NOTE 26 Jan 2017

I am grateful to Anthony Durity for drawing my attention to the work of Kirschner who clearly recognized the gap that was ignored in the quotation by Graham Bell above. Some important steps towards providing answers seem to have been taken in M.W. Kirschner and J.C. Gerhart (2005). See also this interview with Marc Kirschner:

http://bmcbiol.biomedcentral.com/articles/10.1186/1741-7007-11-110

"So I think that to explain these developments in terms of the properties of cell and developmental systems will unify biology into a set of common principles that can be applied to different systems rather than a number of special cases that have to be learned somehow by rote." There is a useful short review here

http://www.americanscientist.org/bookshelf/pub/have-we-solved-darwins-dilemma

This seems to be consistent with the theory of evolved construction kits being developed as part of the M-M project, but does not answer all the questions, especially my driving question about how evolution produced mathematicians like Archimedes and Euclid.

A corollary: a tree/network of evolved construction kits

Added 10 Oct 2016

A more detailed discussion will need to show how construction kits of various sorts are located in a branching tree (or network) of kits produced and used by biological evolution and its products.

This will add another network to the previously discussed networks of evolved designs and evolved niches (sets of requirements) [Sloman 1995]. A third network, produced in parallel with the first two, will include evolved construction kits, including the fundamental construction kit and derived kits. These are unlikely to be simple tree-like networks because of the possibility of new designs, new niches and new construction kits being formed by combining previously evolved instances, so that branches merge.
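The difference between a tree and a network of kits can be checked mechanically. This Python sketch records, for each kit, the kits it was derived from; the kit names are hypothetical placeholders chosen for readability, not claims about actual evolutionary history. A kit with more than one parent is a point where previously evolved branches merge, and that is what stops the structure being a tree.

```python
# Hypothetical lineage of construction kits: each kit lists the kits it was
# derived from. Names are illustrative only.
DERIVED_FROM = {
    "FCK": [],
    "chemistry_kit": ["FCK"],
    "membrane_kit": ["chemistry_kit"],
    "replication_kit": ["chemistry_kit"],
    "cell_kit": ["membrane_kit", "replication_kit"],  # two branches merge
    "symbiotic_kit": ["cell_kit", "membrane_kit"],    # another merge
}

def merge_points(lineage):
    """Kits formed by combining two or more previously evolved kits."""
    return sorted(kit for kit, parents in lineage.items() if len(parents) > 1)

def is_tree(lineage):
    """Assuming the lineage is acyclic: it is a rooted tree only if it has
    exactly one root and no kit has more than one parent."""
    roots = [kit for kit, parents in lineage.items() if not parents]
    return len(roots) == 1 and not merge_points(lineage)

def ancestors(lineage, kit):
    """All kits that `kit` was directly or indirectly derived from."""
    found = set()
    stack = list(lineage[kit])
    while stack:
        parent = stack.pop()
        if parent not in found:
            found.add(parent)
            stack.extend(lineage[parent])
    return found

print(merge_points(DERIVED_FROM))  # ['cell_kit', 'symbiotic_kit']
print(is_tree(DERIVED_FROM))       # False
print(sorted(ancestors(DERIVED_FROM, "cell_kit")))
# ['FCK', 'chemistry_kit', 'membrane_kit', 'replication_kit']
```

Every kit's ancestry still bottoms out in the FCK, even though the overall structure is a network rather than a tree.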

Beyond supervenience to richer forms of support

This suggested relationship between living things and physical matter goes beyond standard notions of how life (including animal minds) might supervene on physical matter, or how life might be reduced to matter.

According to the theory of construction kits, the existence of a living organism is not fully explained in terms of how all of its structures and capabilities are built on physical structures forming its parts, and their capabilities. For organisms also depend on the previous and concurrent existence of structures and mechanisms that are essential for the biological production processes. Of course, in many cases those are parts of the organism, and in other cases parts of the organism's mother. Various types of symbiosis add complex mutual dependencies to the story.

The above ideas are elaborated below in the form of a (still incomplete and immature) theory of construction kits, including construction kits produced by biological evolution and its products. I'll present some preliminary, incomplete, ideas about types of construction kit, and their roles in the astounding creativity of biological evolution. This includes the Fundamental Construction Kit (FCK) provided by the physical universe (including chemistry), and many Derived Construction Kits (DCKs) produced by physical circumstances, evolution, development, learning, ecosystems, cultures, etc.

We also need to distinguish construction kits of different types, such as:

- concrete (physical) construction kits, all of whose parts are made of physical materials (including chemical structures and processes);

- abstract construction kits made of abstractions that can be instantiated in different ways, e.g. grammars, axiom systems;

- hybrid construction kits with both concrete and abstract components (e.g. games like tennis, reasoning mechanisms);

- meta-construction kits that can be used to construct new construction kits.

The role(s) of information in life and its evolution

Life involves information in many roles, including reproductive information in a genome, internal information processing for controlling internal substates, and many forms of human information processing, including some that were not directly produced by evolution, but resulted from human learning or creation, possibly followed by teaching, learning or imitation, all of which require information processing capabilities. Such capabilities require concrete (physical) construction kits that can build and manipulate structures representing information: the combinations are hybrid construction kits. Understanding the types of construction kit that can produce information processing mechanisms of various types is essential for the understanding of evolution, especially evolution of minds of many kinds.

In doing all this, evolution (blindly) made use of increasingly complex mathematical structures. Later, humans and other animals also discovered and used some of the mathematical structures, without being aware of what they were doing. Later still, presumably after extensions to their information processing architectures supported additional metacognitive information processing, a subset of humans began to reflect on and argue about such discoveries and human mathematics was born (e.g. Euclid, and his predecessors). What they discovered included pre-existing mathematical structures, some of which they had previously used unwittingly. This paper explores these ideas about construction kits, and some of their implications.

NOTES:

Examples of metacognitive mathematical or proto-mathematical information processing can be found in [Sloman 2015].

The word "information" is used here (and in other papers on this web site) not in the sense of Claude Shannon (1948), but in the much older semantic sense of the word, as used, for example, by Jane Austen. See: http://www.cs.bham.ac.uk/research/projects/cogaff/misc/austen-info.html

Added 12 Mar 2016

[Kauffman 1993] seems to be directly relevant to this project, especially as regards construction kits that build directly on chemical mechanisms.

2 Fundamental and Derived Construction Kits (FCK, DCKs)

Natural selection alone cannot explain how evolution happens, for it must have options to select from. What sorts of mechanism can produce options that differ so much in so many ways, allowing evolution to produce microbes, fungi, oaks, elephants, octopuses, crows, new niches, ecosystems, cultures, etc.? Various sorts of construction kit, including evolved/derived construction kits, help to explain the emergence of new options. What explains the possibility of these construction kits? Ultimately, features of fundamental physics, including those emphasised in [Schrödinger 1944] and discussed below (perhaps one of the deepest examples of a scientific theory attempting to explain how something is possible). Why did it take so much longer for evolution to produce baboons than bacteria? Not merely because baboons are more complex, but also because evolution had to produce more complex construction kits, to make baboon-building possible.

What makes all of this possible is the construction kit provided by fundamental physics, the Fundamental Construction Kit (FCK) about which we still have much to learn, even if modern physics has got beyond the stage lampooned in this SMBC cartoon:

http://www.smbc-comics.com/?id=3554

Figure SMBC: Fundamental

The full comic strip is also available as an image (expand it in your browser):

http://www.smbc-comics.com/comics/20141125.png

(I am grateful to Tanya Goldhaber for the link.)

Perhaps SMBC will one day produce a similar cartoon whose dialogue ends thus:

Student: "Professor, what's an intelligent machine?"

Professor: "Anything smarter than what was intelligent a generation ago."

As hinted by the cartoon, there is not yet agreement among physicists as to what exactly the FCK is, or what it can do. Perhaps important new insights into properties of the FCK will be among the long term outcomes of our attempts to show how the FCK can support all the DCKs required for developments across billions of years, and across no-one knows how many layers of complexity, to produce animals as intelligent as elephants, crows, squirrels, or even humans (or their successors).

NOTE:

29 Dec 2016

A question not discussed here is whether use of construction kits merely allowed certain designs to be achieved faster, or whether some constructions were absolutely impossible without the kits. Compare this question: Without cranes, scaffolding, and other aids to skyscraper production, would it have been possible for humans to create 21st century skyscrapers (perhaps as termites "grow" their cathedrals)? There seem to be several reasons why the answer must be negative, including, perhaps, the impossibility of creating very tall buildings resistant to very strong winds and minor earthquakes, and containing tons of equipment as well as humans, without using separately constructed parts, including girders that are part of the structure and temporary scaffolding required during construction. As far as I know, termites do not construct major portions of their cathedrals in separate places then bring them together for assembly.

Construction-kits are the "hidden heroes" of evolution. Life as we know it requires construction kits supporting construction of machines with many capabilities, including growing many types of material, many types of mechanism, many different highly functional bodies, immune systems, digestive systems, repair mechanisms, reproductive machinery, information processing machinery (including digital, analogue and virtual machinery) and even producing mathematicians, such as Euclid and his predecessors!

A kit needs more than basic materials. If all the atoms required for making a loaf of bread could somehow be put into a container, no loaf could emerge. Not even the best bread making machine, with paddle and heater, could produce bread from atoms, since that would require atoms pre-assembled into the right amounts of flour, sugar, yeast, water, etc. Only different, separate, histories can produce the molecules and multi-molecule components, e.g. grains of yeast or flour.

Likewise, no fish, reptile, bird, or mammal could be created simply by bringing together enough atoms of all the required sorts; and no machine, not even an intelligent human designer, could assemble a functioning airliner, computer, or skyscraper directly from the required atoms. Why not, and what are the alternatives? We first state the problem of constructing very complex working machines in very general terms and indicate some of the variety of strategies produced by evolution, followed later by conjectured features of a very complex, but still incomplete, explanatory story.

2.1 Combinatorics of construction processes

Reliable construction of a living entity requires: appropriate types of matter, machines that manipulate matter, physical assembly processes, stores of energy for use during construction, and usually information, e.g. about which components to assemble at each stage, how to assemble them, and how to decide in what order to do so. This requires, at every stage, at least: (i) components available for the remaining stages, (ii) information about which components, and which movements and other processes, are required, (iii) mechanisms capable of assembling the components, (iv) mechanisms able to decide what should happen next, whether selection is random or information-based. Sometimes the mechanisms are part of the structure assembled, sometimes not. Some may be re-usable multi-purpose mechanisms, while others are temporary structures discarded after use, e.g. types of scaffolding, and other tools. In this context the egg-shell of a bird or reptile could be viewed as scaffolding (a temporary structure supporting safe development).

The mechanisms involved in construction of an organism can be thought of as a construction kit, or collection of construction kits. Some components of the kit are parts of the organism and are used throughout the life of the organism, e.g. cell-assembly mechanisms used for growth and repair. Construction kits used for building information processing mechanisms may continue being used and extended long after birth, as discussed in the section on epigenesis below. All of the construction kits must ultimately come from the Fundamental Construction Kit (FCK) provided by physics and chemistry.

Figure 1 FCK: The Fundamental Construction Kit

Figure 2 DCK: Derived Construction Kits

The space of possible trajectories for combining basic constituents is enormous, but routes can be shortened and search spaces shrunk by building derived construction kits (DCKs) that are able to assemble larger structures in fewer steps6, as indicated in Fig. 2.

-------------------------

6 Assembly mechanisms are part of the organism, as illustrated in a video of grass "growing itself" from seed: https://www.youtube.com/watch?v=JbiQtfr6AYk. In mammals with a placenta, more of the assembly process is shared between mother and offspring.
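A crude count shows why building with derived kits can shrink search spaces and shorten routes. The Python sketch below assumes a deliberately simplified model in which a structure is a linear sequence of independent choices; all the numbers (4 basic component types, a 12-unit structure, a hypothetical derived kit offering 10 prebuilt 3-unit modules) are arbitrary illustrations, not estimates for any real organism.

```python
def search_space(num_part_types, num_choices):
    """Number of distinct linear sequences of `num_choices` choices, each
    made from `num_part_types` alternatives (a deliberately crude model)."""
    return num_part_types ** num_choices

STRUCTURE_SIZE = 12  # basic units in the target structure (arbitrary)
BASIC_TYPES = 4      # kinds of basic component (arbitrary)
MODULE_SIZE = 3      # basic units per prebuilt module (hypothetical kit)
MODULE_TYPES = 10    # kinds of prebuilt module (hypothetical kit)

# Assembling directly from basic components: 12 steps.
direct = search_space(BASIC_TYPES, STRUCTURE_SIZE)
# Assembling from the derived kit's modules: only 12 // 3 = 4 steps.
derived = search_space(MODULE_TYPES, STRUCTURE_SIZE // MODULE_SIZE)

print(direct, derived)  # 16777216 10000
```

The saving has a price: the derived kit can only produce structures that decompose into its modules, so it narrows which structures are reachable as well as speeding up access to the ones it can build.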

The history of technology, science, engineering and mathematics includes many transitions in which new construction kits are derived from old ones by humans. That includes the science and technology of digital computation, where new advances used an enormous variety of discoveries and inventions including these (among many others):

- Jacquard looms in which punched cards were used to control operations in complex weaving machines;

- punched cards, punched tape, and mechanical sorting devices in business data-processing;

- electronic circuits, switches, mercury delay lines, vacuum tubes, switchable magnets, and other devices;

- arrays of transistors, connected electronically;

- machine language instructions expressed as bit-patterns, initially laboriously "loaded" into electronic computers by making connections between parts of re-configurable circuits, and, in later systems, by setting banks of switches on or off;

- symbolic machine languages composed of mnemonics that are "translated" by mechanical devices into bit-patterns on punched cards or tapes that can be read into a machine to get it set up to run a program;

- compilers and assemblers that translate symbolic programs into bit patterns;

- use of operating systems: including programs that manage other programs and hardware resources;

- electronic interfaces between internal digital mechanisms and external input, output, and networking devices, along with operating system extensions and specialised software interface-handlers to deal with interactions between internal and external events and processes;

- many types of higher level programming language that are compiled to machine language or to intermediate level languages before programs start running;

- higher level programming languages that are never compiled (i.e. translated into and replaced by programs in lower level languages) but are interpreted at run time, with each interpreted instruction triggering a collection of behaviours, possibly in a highly context-sensitive way;

- network-level management systems allowing ever more complex collections of computers and other machines to be connected to support many kinds of control, collaboration, and communication.

Particular inventions were often generalised and parametrized, using mathematical abstractions, by replacing parts of a specification for a design with slots, or gaps, or "variables", that could have different "fillers" or "values". This produces patterns, or abstract designs, that can be reused in new contexts by filling the slots with different specifications.

This is a form of discovery with a long history in mathematics, e.g. discovering a "Group" pattern in different areas of mathematics and then applying general theorems about groups to particular instantiations.
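The "pattern with slots" idea can be illustrated in programming terms. The following Python sketch is our own illustration, not taken from the source: the group axioms are written once, with the carrier set, the operation and the identity element left as slots, and then checked against two different fillers (integers mod 6 under addition, nonzero residues mod 7 under multiplication). The function names `is_group`, `element_order`, `add_mod6` and `mul_mod7` are hypothetical. A consequence of a general theorem about finite groups (Lagrange's theorem: the order of each element divides the size of the group) is then checked on both instances; the code only checks it, it does not prove it.

```python
from itertools import product
from typing import Callable, Hashable, Iterable


def is_group(elements: Iterable[Hashable], op: Callable, identity: Hashable) -> bool:
    """Check the group axioms on a finite set.

    The set, the operation and the identity are the 'slots';
    each call supplies different 'fillers'.
    """
    elems = list(elements)
    members = set(elems)
    if any(op(a, b) not in members for a, b in product(elems, repeat=2)):
        return False  # not closed under op
    if any(op(op(a, b), c) != op(a, op(b, c)) for a, b, c in product(elems, repeat=3)):
        return False  # not associative
    if identity not in members or any(op(identity, a) != a or op(a, identity) != a for a in elems):
        return False  # the identity slot is filled wrongly
    # Every element needs a two-sided inverse.
    return all(any(op(a, b) == identity == op(b, a) for b in elems) for a in elems)


def element_order(a, op, identity) -> int:
    """Smallest n >= 1 such that combining a with itself n times gives identity (finite groups only)."""
    n, x = 1, a
    while x != identity:
        x = op(x, a)
        n += 1
    return n


def add_mod6(a, b):
    return (a + b) % 6


def mul_mod7(a, b):
    return (a * b) % 7


if __name__ == "__main__":
    instances = [(range(6), add_mod6, 0), (range(1, 7), mul_mod7, 1)]
    for elems, op, e in instances:
        size = len(elems)
        # The same general property is checked for each instantiation of the pattern.
        print(is_group(elems, op, e), all(size % element_order(a, op, e) == 0 for a in elems))
    # A filler that does not fit the pattern: 2 * 3 = 0 (mod 6) is outside {1, ..., 5}.
    print(is_group(range(1, 6), lambda a, b: (a * b) % 6, 1))
```

The checker knows nothing about addition or multiplication; all the specific content comes from the fillers, which is what allows one piece of reasoning to be reused across instantiations.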

During the growth of an organism an unchanging overall design needs to accommodate systematically changing physical parts and corresponding systematically changing control features that make good use of increased size, weight, strength, reach, and speed, without, for example, losing control because larger parts have greater momentum.

Note (added 9 Apr 2016): The discovery by evolution of designs that can be parametrized and re-used in different contexts involves discovery and use of mathematical structures that can be instantiated in different ways. This is one of the main reasons for regarding evolution as a "Blind Theorem Prover". Evolution implicitly proves many such forms of mathematical generalization to be possible and useful. The historical evolutionary/developmental trajectory leading up to a particular instantiation of such a possibility constitutes an implicit proof of that possibility. Of course all that can happen without any explicit recognition that it has happened: that's why evolution can be described as a "Blind mathematician" in the same sense of "Blind" as has been used in the label "Blind watchmaker", e.g. by Richard Dawkins (echoing Paley). https://en.wikipedia.org/wiki/The_Blind_Watchmaker.

According to Wikipedia, a newborn foal "will stand up and nurse within the first hour after it is born, can trot and canter within hours, and most can gallop by the next day". Contrast hunting mammals and humans, which are born less well developed and go through more varied forms of locomotion, requiring more varied forms of control and learning, during relative and absolute changes of sizes of body parts, relations between sizes, strength of muscles, perceptual competences, and predictive capabilities.

https://en.wikipedia.org/wiki/Foal

Control mechanisms for a form of movement, such as crawling, walking, or running, will continually need to adapt to changes of various features in physical components (size, bone strength, muscle strength, weight, joint configuration, etc.). It is unlikely that every such physical change leads to a complete revision of control mechanisms: it is more likely that for such species evolution produced parametrized control so that parameters can change while the overall form of control persists for each form of locomotion.
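A toy Python sketch can make "parameters change while the overall form of control persists" concrete. It is our illustration, not a model from the source: a limb is reduced to a point mass moved towards a target by a proportional-derivative (PD) controller. The form of the control law never changes; only its gains are rescaled with body mass. The rule in the hypothetical `scaled_gains` function, and the values of `omega` (natural frequency) and `zeta` (damping ratio), are assumptions chosen so that the closed-loop motion is the same for every mass. Real neuromuscular control is far more complex.

```python
def simulate(mass: float, kp: float, kd: float, target: float = 1.0,
             dt: float = 0.001, duration: float = 5.0) -> float:
    """Move a point mass towards target with a PD controller; return its final position.

    Uses semi-implicit Euler integration. Toy model: a 'limb' is just a mass.
    """
    x, v = 0.0, 0.0
    for _ in range(round(duration / dt)):
        force = kp * (target - x) - kd * v  # same control law whatever the parameters
        v += (force / mass) * dt
        x += v * dt
    return x


def scaled_gains(mass: float, omega: float = 4.0, zeta: float = 1.0):
    """Assumed parametrization: gains grow with mass, so acceleration per unit error is unchanged."""
    return mass * omega ** 2, 2.0 * mass * zeta * omega


if __name__ == "__main__":
    for mass in (1.0, 10.0, 100.0):
        kp, kd = scaled_gains(mass)
        print(f"mass {mass:6.1f}, rescaled gains: final x = {simulate(mass, kp, kd):.4f}")
    # Gains tuned for a small body but used unchanged by a much heavier one:
    kp1, kd1 = scaled_gains(1.0)
    print(f"mass  100.0, gains for mass 1: final x = {simulate(100.0, kp1, kd1):.4f}")
```

With rescaled gains every body size reaches the target smoothly within the simulated time; reusing the small body's gains on the heavy body gives a slow, badly under-damped movement that is still oscillating about the target, a crude analogue of losing control because larger parts have greater momentum.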

If such mathematically abstract control structures have evolved within a species they could perhaps also have been re-used across different genomes for derived species that vary in size, shape, etc. but share the abstract topological design of a vertebrate. Similar comments about the need for mathematical abstractions for control apply to many actions requiring informed control, e.g. grasping, pushing, pulling, breaking, feeding, carrying offspring, etc.

Related ideas are mentioned in connection with construction kits for ontologies below.

Note added 15 Dec 2018

There are several different theories and computational models that make use of the fact that when searching a space of designs, or solutions to problems, instead of investigating only different combinations of the smallest building blocks (e.g. bits in the case of a bit string), it is often much more efficient to build new search spaces from larger building blocks that have already been found to be useful. An example use of this idea is the theory of Genetic Programming.

New tools, including virtual machinery

The production of new applications also frequently involved production of new tools for building more complex applications, and new kinds of virtual machinery, allowing problems, solutions and mechanisms to be specified in a manner that was independent of the physical implementation details.

Natural selection did something similar on an even larger scale, with far more variety, probably discovering many obscure problems and solutions (including powerful abstractions) still unknown to us. (An educational moral: teaching only what has been found most useful can discard future routes to possible major new advances, like depleting a gene pool.)

Biological construction kits derived from the FCK can combine to form new Derived Construction Kits (DCKs), some specified in genomes, and (very much later) some discovered or designed by individuals (e.g. during epigenesis Sect. 2.3), or by groups, for example new languages. Compared with derivation from the FCK, the rough calculations above show how DCKs can enormously speed up searching for new complex entities with new properties and behaviours. See Fig. 2.

New DCKs that evolve in different species in different locations may have overlapping functionality, based on different mechanisms: a form of convergent evolution. E.g., mechanisms enabling elephants to learn to use trunk, eyes, and brain to manipulate food may share features with those enabling primates to learn to use hands, eyes, and brains to manipulate food. In both cases, competences evolve in response to structurally similar affordances in the environment. This extends ideas in [Gibson 1979] to include affordances for a species, or collection of species.7

-------------------------

7 Implications for evolution of vision and language are discussed in http://www.cs.bham.ac.uk/research/projects/cogaff/talks/#talk111

NOTE on vision (Added 3 Sep 2017)

There may also be closely related, partially overlapping, affordances, including, for example, the affordances suited to compound eyes, whose multiple lenses each produce a small amount of visual information. Compound eyes evolved a long time before simple eyes, in which a single lens projects a rich, spatially organised information structure onto a retina containing many receptors with receptive fields of different sizes. Both types are used with extraordinary success in the species that have them, but the requirements and opportunities for information processing are very different.

This implies that evolution had to produce not only different construction kits for producing and assembling the physical components of the two kinds of eyes, but also corresponding construction kits for producing the two kinds of information processing system.

Moreover, each of the two kinds evolved many different specialisations. E.g. some mammals and birds have eyes with overlapping visual fields, providing opportunities for using triangulation to infer distance (stereopsis), whereas others have non-overlapping (or barely overlapping) visual fields, providing information about more of the surrounding environment.

Readers are invited to think about how the differences are relevant to different needs of hunters and prey, as well as differing needs of nest/shelter builders and animals that don't build or use nests.

Combinatorics of biological constructions.

If there are N types of basic component and a task requires an object of type O composed of K basic components, the size of a blind exhaustive search for a sequence of types of basic component to assemble an O is up to $N^{K}$ sequences, a number that rapidly grows astronomically large as K increases. If, instead of starting from the N types of basic component, the construction uses M types of pre-assembled component, each containing P basic components, then an O will require only K/P pre-assembled parts. The search space for a route to O is reduced in size to $M^{K/P}$.

Compare assembling an essay of length 10,000 characters (a) by systematically trying elements of a set of about 30 possible characters (including punctuation and spaces) with (b) choosing from a set of 1000 useful words and phrases, of average length 50 characters. In the first case each choice has 30 options but 10,000 choices are required. In the second case there are 1000 options per choice, but far fewer stages: 200 instead of 10,000. So the size of the (exhaustive) search space is reduced from $30^{10000}$, a number with 14,772 digits, to about $1000^{200}$, a number with only 601 digits: a very much smaller number. So trying only good pre-built substructures at each stage of a construction process can produce a huge reduction of the search space for solutions of a given size, though some solutions may be missed. If no useful result is found, the search has to go back to "starting from fundamentals", which may take a very long time, for the reasons given.
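The digit counts in the essay comparison can be checked exactly with Python's arbitrary-precision integers. The sketch below is our illustration; the helper names `num_digits` and `search_space_sizes` are hypothetical. It counts digits by integer comparison rather than with `str()`, because recent Python versions by default refuse to convert integers with more than 4300 digits to strings.

```python
def num_digits(n: int) -> int:
    """Exact number of decimal digits in a positive integer, without converting it to a string."""
    d = max(1, int(n.bit_length() * 0.30102999566398))  # rough estimate using log10(2)
    while 10 ** d <= n:        # estimate too small: n has at least d + 1 digits
        d += 1
    while 10 ** (d - 1) > n:   # estimate too large: n has fewer than d digits
        d -= 1
    return d


def search_space_sizes(n_basic: int, k_components: int, m_prebuilt: int, p_per_part: int):
    """Return (N**K, M**(K/P)): blind search space sizes from basic vs pre-assembled parts.

    Assumes, as in the text, that each pre-assembled part contains exactly P basic components.
    """
    if k_components % p_per_part != 0:
        raise ValueError("this sketch assumes P divides K")
    return n_basic ** k_components, m_prebuilt ** (k_components // p_per_part)


if __name__ == "__main__":
    # Essay example: 10,000 characters from about 30 symbols, or 200 choices from 1000 phrases.
    by_chars, by_phrases = search_space_sizes(30, 10_000, 1000, 50)
    print(num_digits(by_chars), num_digits(by_phrases))  # 14772 601
```

Treating every phrase as exactly 50 characters long is the simplifying assumption behind the "about" in the text.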

So, learning from experience by storing useful subsequences can achieve dramatic reductions, analogous to a house designer moving from thinking about how to assemble atoms, to thinking about assembling molecules, then bricks, planks, tiles, then pre-manufactured house sections.

The reduced search space contains fewer samples from the original possibilities, but the original space is likely to have a much larger proportion of useless options. As sizes of pre-designed components increase, so does the variety of pre-designed options to choose from at each step, though far, far fewer search steps are required for a working solution, if one exists: a very much shorter evolutionary process.

The cost of searching in the reduced space may be exclusion of some design options. In the case of biological evolution, there is not one process of design and construction: huge numbers of evolutionary trajectories are explored at different times and locations, and occasionally natural disasters that wipe out a collection of very successful solutions may force a return to a different branch in the search space that leads to even greater successes, e.g. producing more versatile or more intelligent species, as seems to have happened after the destruction of most dinosaurs. (CHECK)

This indicates intuitively, but very crudely, how using increasingly large, already tested useful part-solutions can enormously reduce the search for viable solutions -- if they exist!
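A small, runnable toy can show both sides of this trade-off. It is our illustration, not an evolutionary model: a blind search enumerates every sequence of parts, shortest first, until one spells a target string. The four-letter alphabet `BASICS` and the library of stored chunks `CHUNKS` are hypothetical. A target that the stored chunks can build is found after very few candidates; a target they cannot build is missed, and the search must fall back on the basic components.

```python
from itertools import product
from typing import Optional, Sequence, Tuple

BASICS = "abcd"                       # hypothetical basic components
CHUNKS = ["ab", "cd", "dcba", "bad"]  # hypothetical stored, previously useful parts


def blind_search(target: str, parts: Sequence[str]) -> Optional[int]:
    """Try every sequence of parts, shortest sequences first.

    Returns the number of candidates examined when the target is assembled,
    or None if no sequence of parts spells the target.
    """
    tried = 0
    # Parts are non-empty, so no sequence longer than the target can match it.
    for length in range(1, len(target) + 1):
        for combo in product(parts, repeat=length):
            tried += 1
            if "".join(combo) == target:
                return tried
    return None


def search_with_fallback(target: str) -> Tuple[str, Optional[int]]:
    """Search with stored chunks first; go back to basic components if the chunks cannot build the target."""
    found = blind_search(target, CHUNKS)
    if found is not None:
        return "chunks", found
    return "basics", blind_search(target, BASICS)


if __name__ == "__main__":
    target = "dcbadcba"
    print("basic components:", blind_search(target, BASICS))  # 80441 candidates
    print("stored chunks:   ", blind_search(target, CHUNKS))  # 15 candidates
    print(search_with_fallback("aaaa"))  # ('basics', 85): no chunk sequence spells "aaaa"
```

The chunk library wins enormously when the target is made of useful parts, and is useless when it is not; the same blind procedure is used in both cases, only the building blocks differ.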

Comparison with use of Memo-functions in computing

The technique of storing previously found results of computations is familiar to many programmers, for example in the use of "memo-functions" ("memoization") to reduce computation time. As far as I know the idea was first published in Michie (1968), building on work by Robin Popplestone.

Example

The Fibonacci sequence is a sequence of whole numbers that starts with 0 and 1; thereafter each number is obtained by adding the last two computed numbers. I.e. the function fib is defined as follows:

Definition of "fib(X)":
    fib(0) = 0
    fib(1) = 1
    if X > 1 then fib(X) = fib(X-2) + fib(X-1)

From that you can work out that fib(2) = fib(0) + fib(1), i.e. 1. However, the number of steps required to compute the output number for each input number grows much faster than the input numbers do. (Try using that definition to compute fib(4), fib(5) and fib(6), giving results 3, 5, and 8, respectively, but requiring rapidly increasing numbers of computational steps.)
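A direct Python transcription of the definition makes the growth in effort visible. This sketch is our illustration; the name `fib_naive` is hypothetical, and it returns the number of calls made alongside the value, as a simple measure of "computational steps".

```python
from typing import Tuple


def fib_naive(x: int) -> Tuple[int, int]:
    """Return (fib(x), number of calls), following the recursive definition literally (x >= 0)."""
    if x < 2:                      # fib(0) = 0, fib(1) = 1
        return x, 1
    a, calls_a = fib_naive(x - 2)  # fib(X-2), recomputed from scratch
    b, calls_b = fib_naive(x - 1)  # fib(X-1), which recomputes fib(X-2) yet again
    return a + b, calls_a + calls_b + 1


if __name__ == "__main__":
    for n in (4, 5, 6, 20):
        value, calls = fib_naive(n)
        print(f"fib({n}) = {value} after {calls} calls")
    # fib(4) = 3 after 9 calls, fib(5) = 5 after 15, fib(6) = 8 after 25, fib(20) = 6765 after 21891
```

The call count itself grows like the Fibonacci numbers (it equals 2 * fib(X+1) - 1), because the same smaller values are recomputed over and over.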

Readers used to programming with extendable arrays can work out how to use an array that initially contains no values: whenever fib is invoked with a new number N and produces a new value V, V is stored as the N'th item of the array. fib can then be redefined so that when it is asked to calculate the value for some number, e.g. 20, and the largest value previously calculated was for 13, it very quickly calculates the values for 14, 15, ..., 19, and then uses the last two to calculate the value for 20. The result is achieved in a tiny fraction of the time required without the extra storage.
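Here is one way the array-based scheme just described might look in Python, as a minimal sketch: a Python list stands in for the "extendable array", and the names `memo` and `fib_memo` are hypothetical. Python's standard library offers the general technique as a decorator, `functools.lru_cache` (or `functools.cache` from Python 3.9), which stores results keyed by the arguments rather than in an explicit array.

```python
from typing import List

memo: List[int] = []  # memo[n] holds fib(n); it starts with no values, as in the text


def fib_memo(x: int) -> int:
    """Return fib(x), extending the stored table only as far as needed (x >= 0)."""
    while len(memo) <= x:
        n = len(memo)  # the next index to fill
        # Base cases for n = 0 and n = 1; otherwise add the last two stored values.
        memo.append(n if n < 2 else memo[n - 2] + memo[n - 1])
    return memo[x]


if __name__ == "__main__":
    print(fib_memo(13))  # fills memo[0..13]; prints 233
    print(fib_memo(20))  # computes only fib(14) .. fib(20); prints 6765
    print(len(memo))     # 21 stored values
```

Each new value costs one addition, and a value already in the table costs only a lookup.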

This technique is called use of "Memo-functions". Readers who are unfamiliar with this should try using the above definition to compute fib(2), fib(3), fib(4), to get a feel for how the computation required for each new number expands, and also how much repetition is involved.