'What made it possible for evolution to produce Euclid?'

Biological Foundations For Mathematics

Aaron Sloman

http://www.cs.bham.ac.uk/~axs/

School of Computer Science, University of Birmingham

Centre for Computational Biology Seminar

Wednesday 30th November 2016 (12:00-13:00)

Seminar details

Abstract:

One of the greatest achievements of human minds was Euclid's "Elements", which includes discoveries made by earlier mathematicians, building on ancient mechanisms of perception and reasoning, some of them shared with other species.

Many of the mathematical discoveries made 2,500 years ago or earlier are still in regular use by scientists, mathematicians and engineers.

How could biological evolution, starting with a cloud of dust, or a chemical soup, produce minds capable of making those discoveries in geometry, topology and arithmetic, that are still beyond the reach of the most sophisticated Artificial Intelligence systems?

It is widely believed that one aspect of human thinking that computers are best able to mimic is mathematical thinking.

But that's true only of a subset of types of mathematical thinking, e.g. using logic, arithmetic, statistics, and algebra.

There are mathematical discoveries made thousands of years ago by humans that current AI systems don't seem to be able to replicate, in particular the discoveries in topology and geometry using spatial reasoning made by Euclid and others. (I'll give examples.)

A pre-verbal human toddler seems to be able to make discoveries in 3D topology that no current robot can make, illustrated here:

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/toddler-theorems.html#pencil

It seems that related discoveries can be made and put to practical use by non-human species and pre-verbal human toddlers, though they are not aware of what they are doing, unlike adult human mathematicians.

[NASA artist's impression of a protoplanetary disk, from WikiMedia]

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/meta-morphogenesis.html

I hope that computational biology can help to contribute to an answer to that question and many others.

I am aware that Computational Biology has two different but overlapping aspects (ignoring simply using computation for data-processing):

- Using computers to model a wide variety of biological mechanisms and processes, e.g. formation, transport and interactions of chemicals within organisms, or variations of populations in ecosystems.

- Identifying and trying to understand varieties of information processing in living systems, including their evolution, reproduction, development, and interactions with their environment, inert and living.

In particular I'll focus on abilities to acquire, manipulate and use information about a spatial environment.

One of the questions I'll raise is whether current computing systems can replicate or model all the forms of information processing found in living things.

In particular:

Do some brains use chemical information processing mechanisms that cannot be replicated in digital computers? Or have researchers in AI, cognitive science and neuroscience simply not yet understood what needs to be replicated, and how to replicate it in new virtual machines running on digital computers?

Is the topic of the latest Nobel prize for chemistry relevant?

The role of mathematical mechanisms and mathematical "discoveries" used by evolution is a strand in the Turing-inspired Meta-morphogenesis project -- a very long term project.

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/meta-morphogenesis.html

I hope the project can benefit from related work in Computational Biology at Birmingham:

(INCOMPLETE DRAFT: Liable to change)

(Comments and criticisms welcome)

This document is

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/ccb-seminar-nov-2016.html

A partial index of discussion notes is in

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/AREADME.html

Installed: 29 Nov 2016

Updated: 1 Dec 2016

CONTENTS

(Contents list to be added, with additional pointers.)

Types of foundation for mathematics

A closely related seminar (17 Nov 2016)

There is considerable disagreement about the nature of mathematics.

Many think of mathematics as essentially something created by human minds -- since humans develop and organise sets of axioms, definitions, proofs, constructions and theorems.

But I claim that long before humans existed biological evolution made use of many mathematical discoveries that had nothing to do with humans.

In particular, evolution implicitly made many mathematical discoveries involving physical structures and processes.

Alan Turing showed, in his 1952 morphogenesis paper, that a wide variety of physical patterns can be produced on the surfaces of living things by a combination of reaction and diffusion processes involving two chemicals, and in recent years mathematicians and physicists have explored a wide variety of biological and non-biological examples.

https://en.wikipedia.org/wiki/Reaction%E2%80%93diffusion_system#Two-component_reaction.E2.80.93diffusion_equations
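The two-chemical mechanism mentioned above is usually written as a pair of equations, du/dt = Du ∇²u + f(u, v) and dv/dt = Dv ∇²v + g(u, v). The following is a minimal sketch, not Turing's own presentation, of the standard linear test for diffusion-driven instability: a uniform state that is stable when the chemicals are well mixed, but becomes unstable to spatial patterns once they diffuse at different rates. The function names, the Jacobian entries `a, b, c, d` and the numerical values are illustrative assumptions.

```python
def turing_unstable(a, b, c, d, Du, Dv):
    """Check the textbook conditions for diffusion-driven instability.

    a, b, c, d are the partial derivatives of the reaction terms f and g
    at the uniform steady state (the Jacobian [[a, b], [c, d]]).
    Du and Dv are the diffusion coefficients of the two chemicals.
    All names here are illustrative, not taken from the source text.
    """
    trace = a + d
    det = a * d - b * c
    # Without diffusion the uniform state must be stable.
    stable_when_mixed = trace < 0 and det > 0
    # With diffusion, some spatial wavenumber must grow: the quadratic
    # Du*Dv*q**2 - (Dv*a + Du*d)*q + det must dip below zero for some q > 0.
    s = Dv * a + Du * d
    unstable_when_diffusing = s > 0 and s * s > 4 * Du * Dv * det
    return stable_when_mixed and unstable_when_diffusing


def fastest_growing_q(a, d, Du, Dv):
    """Squared wavenumber at which the quadratic above is most negative."""
    return (Dv * a + Du * d) / (2 * Du * Dv)


# Hypothetical activator-inhibitor numbers, chosen only for illustration.
jacobian = (1.0, -2.0, 3.0, -4.0)
slow_inhibitor = turing_unstable(*jacobian, Du=1.0, Dv=1.0)
fast_inhibitor = turing_unstable(*jacobian, Du=1.0, Dv=20.0)
```

With these assumed numbers the pattern-forming instability appears only when the second chemical diffuses much faster than the first, which is the qualitative point of the two-chemical mechanism.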

Hence the "meta-" in "The meta-morphogenesis project".

The importance of construction kits

For more details see: http://www.cs.bham.ac.uk/research/projects/cogaff/misc/construction-kits.html

The mechanisms involved in construction of an organism can be thought of as a construction kit, or collection of construction kits. Some components of the kit are parts of the organism and are used throughout the life of the mechanism, e.g. cell-assembly mechanisms used for growth and repair. Construction kits used for building information-processing mechanisms may continue being used and extended long after birth as discussed in the section on epigenesis below. All of the construction kits must ultimately come from the Fundamental Construction kit (FCK) provided by physics and chemistry.

Figure FCK: The Fundamental Construction Kit

A crude representation of the Fundamental Construction Kit (FCK) (on left) and (on right) a collection of trajectories from the FCK through the space of possible trajectories to increasingly complex mechanisms.

The Fundamental Construction Kit (FCK) provided by the physical universe made possible all the forms of life that have so far evolved on earth, and also possible but still unrealised forms of life, in possible types of physical environment. Fig. 1 shows (crudely) how a common initial construction kit can generate many possible trajectories, in which components of the kit are assembled to produce new instances (living or non-living).

The space of possible trajectories for combining basic constituents is enormous, but routes can be shortened and search spaces shrunk by building derived construction kits (DCKs) that assemble larger structures in fewer steps, as indicated in Fig. 2.

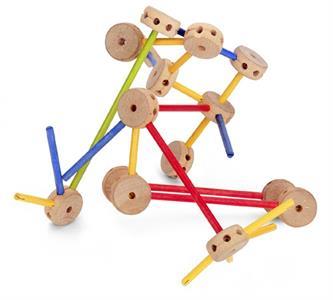

Figure DCK: Derived Construction Kits

Further transitions: a fundamental construction kit (FCK) on left gives rise to new evolved "derived" construction kits, such as the DCK on the right, from which new trajectories can begin, rapidly producing new more complex designs, e.g. organisms with new morphologies and new information-processing mechanisms. The shapes and colours (crudely) indicate qualitative differences between components of old and new construction kits, and related trajectories. A DCK trajectory uses larger components and is therefore much shorter than the equivalent FCK trajectory.
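The claim that derived kits shorten trajectories and shrink search spaces can be illustrated with some toy arithmetic. This is only a sketch under strong simplifying assumptions that are not in the text: a target is built by a sequence of assembly steps, every step chooses among a fixed number of component types, and every derived component bundles the same number of basic parts. The function names and numbers are hypothetical.

```python
def trajectory_length(basic_parts_needed, parts_per_component):
    """Number of assembly steps if each step adds one component."""
    # Ceiling division: a partly used component still costs a whole step.
    return -(-basic_parts_needed // parts_per_component)


def search_space(component_types, steps):
    """Number of distinct assembly sequences of the given length."""
    return component_types ** steps


# Toy numbers (assumptions): the target needs 12 basic parts.
# The fundamental kit offers 4 kinds of basic part, one part per step.
fck_steps = trajectory_length(12, 1)
fck_space = search_space(4, fck_steps)

# A derived kit offers 6 kinds of pre-assembled component, 3 parts each.
dck_steps = trajectory_length(12, 3)
dck_space = search_space(6, dck_steps)
```

In this toy model the derived kit needs a third as many steps and has a far smaller space of candidate sequences, at the cost of being unable to reach every structure that the fundamental kit could in principle produce.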

The importance of layers of construction kits

Different construction kits have different mathematical properties:

E.g. compare Meccano, Fischer-teknik, Lego, Tinker toys, plasticine, blocks, sand, mud, paper+scissors+glue, various electronic devices, etc.

Bringing separately developed construction kits together can produce sudden changes in what can be built and what sorts of behaviours the constructions can produce.

There are many processes that produce new developments in construction kits. A particularly important one is abstraction by parametrisation -- only possible for some sorts of construction kit.

Parameters can be simple measures or complex structures -- e.g. a perceived obstacle as a parameter for procedures for coping with obstacles, or a perceived gap in an incomplete construction to be filled by an object found in the environment.

Construction kits for information processing

As organisms become capable of more varied and more complex actions and develop a wider variety of needs -- for different kinds of food, for shelter, for mates, for care of offspring -- they depend on increasingly complex and varied types of information, put to increasingly complex and varied uses, as crudely indicated in this sequence:

More complex organisms may be able to sense features of their environment and produce motion -- random motion at first, then later on directed motion (e.g. following gradients).

Later still, some organisms can alter objects in their environment in order to make tools, shelters, etc.
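The step from random motion to directed motion in the sequence above can be sketched in a few lines. This is an illustrative toy, not a model of any real organism: the nutrient field, the grid of unit moves and the function names are all assumptions made for the example.

```python
import random

# The four unit moves available to the hypothetical organism.
MOVES = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def concentration(x, y):
    """Hypothetical nutrient field with a single peak at (5, 5)."""
    return -((x - 5) ** 2 + (y - 5) ** 2)


def random_motion(start, steps, rng):
    """Move in a randomly chosen direction at every step."""
    x, y = start
    for _ in range(steps):
        dx, dy = rng.choice(MOVES)
        x, y = x + dx, y + dy
    return (x, y)


def gradient_following(start, steps):
    """Sense the four neighbouring cells and step towards the best one."""
    x, y = start
    for _ in range(steps):
        dx, dy = max(MOVES, key=lambda m: concentration(x + m[0], y + m[1]))
        if concentration(x + dx, y + dy) <= concentration(x, y):
            break  # no neighbour is better: a local peak has been reached
        x, y = x + dx, y + dy
    return (x, y)


wanderer = random_motion((0, 0), 50, random.Random(0))
climber = gradient_following((0, 0), 50)
```

The gradient follower uses only local sensing and a fixed repertoire of moves, which is exactly the kind of mechanism that the later sections contrast with constructing new types of action.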

A whole variety of different actions in different contexts will depend on grasping spatial structures and relationships.

Many AI systems are trained to respond to perceived configurations with actions selected from a fixed repertoire.

In contrast intelligent animals can construct new types of action to solve new problems.

It is widely believed that this involves learning statistical correlations: but there is something much deeper and more powerful -- a generalisation of Gibson's view of perception.

Theories about functions of perception

- Sensory pattern recognition

- Learning responses to sensory patterns

- Acquiring information about the contents of the environment (exosomatic information contrasted with somatic information -- about what's happening in the body).

- Learning to think about possibilities and constraints on possibilities

Compare Gibson's theory: perception provides information about affordances, i.e. possible actions by the perceiver and their consequences.

This can be generalised to perceiving possibilities for change, e.g. an object can move left, move right, move up, move down, come nearer, move further, rotate in various directions, etc.

Each of the possibilities, if realised, will produce additional changes.

Gibson restricted his theory of affordances to perception of possible actions by the perceiver with good or bad consequences for the perceiver. (With some exceptions, e.g. affordances for others.)

But we can generalise this to perception of possibilities for change that are not necessarily produced by the perceiver and understanding of effects of such changes, which may or may not be relevant to the perceiver's needs.

I call these "proto-affordances".

- The perception of proto-affordances can lead on to understanding a wide range of possibilities and impossibilities connected with topological and geometrical properties and relationships.

- In humans and other animals this can lead to processes of reasoning about how to produce or avoid various effects via complex actions: chained affordances.

Conjecture from affordances to mathematics

I suspect that whereas many intelligent animals evolved abilities to perceive and make use of possibilities and constraints on possibilities -- humans somehow developed additional meta-cognitive abilities to notice what they are doing, form generalisations and test them.

This could also lead to meta-meta-cognitive processes comparing effects in different contexts, leading to new generalisations about what does and does not work in various conditions.

The need for collaboration and the benefits of passing on acquired competences to offspring could have used additional abilities to perceive or infer knowledge states or competences of others and to take action to improve others, e.g. teaching.

Later this might have led to challenges and responses, i.e. something like construction of proofs.

Topology and Geometry vs Logic and arithmetic

Topological discoveries include noticing the consequences of combining two possibilities for 2-D closed curves:

- Curve A completely encloses curve B

- Curve B completely encloses some object C

Together these imply:

- Curve A completely encloses object C
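The enclosure example can be tried out on a very restricted family of closed curves. The sketch below uses circles only, which is an assumption made to keep the containment test simple, and the class and function names are hypothetical. It checks instances; it does not capture how a human sees that the conclusion must hold for every closed curve, which is the ability the text says needs explaining.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Circle:
    x: float
    y: float
    r: float


def encloses(outer, inner):
    """True if `inner` lies strictly inside `outer` (circles only)."""
    gap = math.hypot(outer.x - inner.x, outer.y - inner.y)
    return gap + inner.r < outer.r


def count_counterexamples(trials, seed):
    """Sample random triples; count cases where A encloses B and
    B encloses C but A fails to enclose C."""
    rng = random.Random(seed)

    def sample():
        return Circle(rng.randint(-5, 5), rng.randint(-5, 5), rng.randint(1, 12))

    failures = 0
    for _ in range(trials):
        a, b, c = sample(), sample(), sample()
        if encloses(a, b) and encloses(b, c) and not encloses(a, c):
            failures += 1
    return failures


curve_a = Circle(0, 0, 10)
curve_b = Circle(1, 1, 5)
object_c = Circle(2, 1, 1)
```

Sampling finds no counterexamples, but however many cases are tried this remains evidence about instances, whereas the topological insight is that a counterexample is impossible.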

Other examples

Closed 2D shapes can be formed of straight lines chained together.

The smallest number of lines forming a closed space is 3: a triangle.

If three lines are connected to enclose a space there will be three corners.

By changing relative lengths of lines the shapes of the triangles including the angles at the corners can be varied.

But whatever the angles they must add up to a straight line.

The "standard" proofs of the "Triangle Sum Theorem"

Two "standard" proofs of the triangle sum theorem using parallel lines, and the Euclidean theorems COR and/or ALT stated above, are shown below in Figure Ang1:

Figure Ang1:

Warning: I have found some online proofs of theorems in Euclidean geometry with bugs apparently due to carelessness, so it is important to check every such proof found online. The fact that individual thinkers can check such a proof is part of what needs to be explained.
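For readers without access to the figure, one common form of the parallel-line argument can be written out as follows. The point names D and E and the angle names are notation introduced here, and the step marked "alternate angles" assumes the usual Euclidean property of parallel lines (presumably what the text calls ALT).

```latex
% Triangle ABC with interior angles \alpha at A, \beta at B, \gamma at C.
% Draw the line DE through C parallel to AB, with D on the same side as A
% and E on the same side as B.
\begin{align*}
  \angle DCA &= \alpha && \text{(alternate angles, } DE \parallel AB\text{)} \\
  \angle ECB &= \beta  && \text{(alternate angles, } DE \parallel AB\text{)} \\
  \angle DCA + \gamma + \angle ECB &= 180^\circ && \text{(angles on the straight line } DE \text{ at } C\text{)} \\
  \therefore\quad \alpha + \beta + \gamma &= 180^\circ
\end{align*}
```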

Mary Pardoe's proof of the Triangle Sum Theorem

Many years ago at Sussex University I was visited by a former student Mary Pardoe (née Ensor), who had been teaching mathematics in schools. She told me that her pupils had found the standard proof of the triangle sum theorem hard to take in and remember, but that she had found an alternative proof, which was more memorable, and easier for her pupils to understand.

Her proof just involves rotating a single directed line segment (or arrow, or pencil, or ...) through each of the angles in turn at the corners of the triangle, which must result in its ending up in its initial location pointing in the opposite direction, without ever crossing over itself.

So the total rotation angle is equivalent to a straight line, or half rotation, i.e. 180 degrees, using the convention that a full rotation is 360 degrees.

The proof is illustrated below:

It may be best to think of the proof not as a static diagram but as a process, with stages represented from left to right in Figure Ang3.
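The process view of the proof can be mimicked numerically. The sketch below is an assumption-laden illustration rather than a reconstruction of the missing figure: it starts an arrow along side AB, turns it through the interior angle at A, then at C, then at B, always in the same rotational sense, and reports where the arrow ends up. The function names are hypothetical, and a numerical check of particular triangles is of course not the spatial insight the proof relies on.

```python
import math


def interior_angle(p, q, r):
    """Interior angle at vertex p between the rays p->q and p->r (radians)."""
    a1 = math.atan2(q[1] - p[1], q[0] - p[0])
    a2 = math.atan2(r[1] - p[1], r[0] - p[0])
    diff = abs(a1 - a2) % (2 * math.pi)
    return min(diff, 2 * math.pi - diff)


def rotate(v, angle):
    """Rotate vector v counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def pardoe_rotation(A, B, C):
    """Turn an arrow through each corner angle in turn.

    Returns (start_direction, final_direction, total_rotation)."""
    length = math.hypot(B[0] - A[0], B[1] - A[1])
    start = ((B[0] - A[0]) / length, (B[1] - A[1]) / length)
    # Rotate in the sense that sweeps the interior of the triangle.
    cross = (B[0] - A[0]) * (C[1] - A[1]) - (B[1] - A[1]) * (C[0] - A[0])
    sense = 1.0 if cross > 0 else -1.0
    arrow, total = start, 0.0
    # At A the arrow swings onto side AC, at C onto side CB, at B back onto AB.
    for vertex, n1, n2 in ((A, B, C), (C, A, B), (B, C, A)):
        angle = interior_angle(vertex, n1, n2)
        arrow = rotate(arrow, sense * angle)
        total += angle
    return start, arrow, total


start, final, total = pardoe_rotation((0.0, 0.0), (4.0, 0.0), (1.0, 3.0))
```

For every non-degenerate triangle tried, the arrow ends up pointing in the opposite direction to the one it started with, so the three turns together amount to a half rotation.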

What kind of brain mechanism makes such spatial proofs work?

Can we give computers similar powers?

---

To perceive possibilities and impossibilities/constraints

What did biological evolution have to do to give brains these powers?

Can we replicate them in digital computers?

More on construction kits


Construction kits for virtual machinery

This was not well understood a hundred years ago despite many advances in physics and chemistry.

However, since about the 1950s or 1960s increasingly complex and varied virtual machines have been developed providing new types of abstract construction kit.

I conjecture that evolution discovered the importance of virtual machines long before humans did -- not least because human minds (and other animal minds) require very sophisticated forms of virtual machinery.

2.3 Construction kits built during development (epigenesis)

Some new construction kits are products of evolution of a species and are initially shared only between a few members of the species (barring genetic abnormalities), alongside cross-species construction kits shared between species, such as those used in mechanisms of reproduction and growth in related species. Evolution also discovered the benefits of "meta-construction-kits": mechanisms that allow members of a species to build new construction kits during their own development.

Examples include mechanisms for learning that are initially generic mechanisms shared across individuals, and developed by individuals on the basis of their own previously encountered learning experiences, which may be different in different environments for members of the same species. Human language learning is a striking example: things learnt at earlier stages make new things learnable that might not be learnable by an individual transferred from a different environment, part way through learning a different language. This contrast between genetically specified and individually built capabilities for learning and development was labelled a difference between "pre-configured" and "meta-configured" competences in [Chappell Sloman 2007], summarised in Fig. 3. The meta-configured competences are partly specified in the genome but those partial specifications are combined with information abstracted from individual experiences in various domains, of increasing abstraction and increasing complexity.

Mathematical development in humans seems to be a special case of growth of such meta-configured competences. Related ideas are in [Karmiloff-Smith 1992].

Figure 3: Figure EVO-DEVO:

A particular collection of construction kits specified in a genome can give rise to very different individuals in different contexts if the genome interacts with the environment in increasingly complex ways during development, allowing enormously varied developmental trajectories. Precocial species use only the downward routes on the left, producing only "preconfigured" competences. Competences of members of "altricial" species, using staggered development, may be far more varied within a species. Results of using earlier competences interact with the genome, producing "meta-configured" competences shown on the right. This is a modified version of a figure in [Chappell Sloman 2007].

Construction kits used for assembly of new organisms that start as a seed or an egg enable many different processes in which components are assembled in parallel, using abilities of the different sub-processes to constrain one another. Nobody knows the full variety of ways in which parallel construction processes can exercise mutual control in developing organisms. One implication is that there are not simple correlations between genes and organism features.

Foundations for Mathematics

There are several very different things that could be described as "foundations for mathematics".

(a) Neo-Kantian cognitive foundation

This is the kind of foundation that Immanuel Kant tried to provide by describing features of minds that make it possible for them to understand mathematical concepts and discover and make use of mathematical theorems and proofs. He claimed that the knowledge obtained in this manner was non-empirical, included necessary truths, and was synthetic - i.e. not derivable from definitions using only logical inferences. This three-fold characterisation combines a theory of the nature of mathematical truths with a theory of the features of (natural and artificial) minds that enable them to discover mathematical truths.

I don't know whether Kant thought it possible that some kind of non-human mind could in principle exist with the mechanisms required for making mathematical discoveries. A good theory of this sort should be applicable to a variety of types of mind, including artificial minds designed and built by humans.

(b) Mathematical foundation: a mathematical foundation for mathematics

This is the kind of foundation that many mathematicians, logicians and philosophers have attempted to find in the last two centuries or so: a (preferably finite?) subset of mathematics from which everything else can be derived mathematically. In this context, logic is usually regarded as a part of mathematics.

(c) Biological/evolutionary foundations

What made it possible for mathematical discoveries to be made and used by products of biological evolution, and later reasoned about and discussed?

(d) Physical/chemical foundations

How does the physical/chemical universe constrain the kinds of mathematics required for its description and how does it make possible the production, by evolution or engineering, of types of machines (including organisms) with abilities to discover, make use of, and in some cases reason about the mathematical features?

(e) Metaphysical/Ontological foundations?

This is an attempt to answer the question: what makes it the case that there are mathematical truths, some or all of which can be discovered and used, whether by human mathematicians or anything else, e.g. biological evolution or its products?

In principle (e) could be further split into different sorts of foundation or grounding. For example, there might be a world whose physical/chemical properties could not support evolution of organisms with brains capable of making and organising mathematical discoveries of certain kinds. Could there, for example, be universes in which brains could evolve that are capable of making discoveries in geometry and topology but not the arithmetical discoveries that depend on the use of mathematical induction, or proof by contradiction?

Perhaps there are aspects of the kind of mathematics discoverable and in some cases usable in this universe that would be applicable to all possible physical universes, and some aspects of mathematics that are restricted to a subset of possible universes with special properties.

For example, could there be a kind of universe that does not support the physical mechanisms (e.g. brain mechanisms) required for discovery or invention of Euclidean geometry or its alternatives? There may be even more limited physical universes in which it is not possible for physical information processing mechanisms to exist that can support the discovery of the full set of natural numbers, even if some subsets are found to be useful. In that sort of universe no brain mechanisms could ever construct even the thought that the natural numbers "go on indefinitely". It is not obvious what sort of brain could grasp the usefulness of counting using a fixed list of counting noises in connection with a wide range of tasks, and be incapable of having the thought: there is no largest collection of objects that can be counted.

Even in our universe not all brains seem to have that capability, and it is not clear at what stage of development human children are able to comprehend such thoughts, nor how their brains need to change during development to give them such abilities.

NOTE:

David Deutsch (in Deutsch(2011)) seems to think that (c) is solved already because physics allows the implementation of universal computers and they suffice for everything.

NOTE: He doesn't seem to be aware that he is talking about a kind of universality that is relative to a specified space of computations and there may be other computations that are not covered, e.g. the types of information processing used in the ancient mathematical discoveries of type (a).

It seems that brains of intelligent animals like squirrels, crows, elephants, etc. that use currently unknown types of computation lack some of the abilities required for mathematical meta-cognition. Likewise pre-verbal human toddlers.

What counts as a possible universe is not clear. E.g. the "No-space" world containing only coexisting sounds and sound sequences, considered in Strawson(1959) may be too causally impoverished to be capable of supporting information-processing mechanisms or biological evolution, in which case minds could neither exist nor evolve. Perhaps there would be numerical features and regularities in the sound patterns that do exist if such a world is possible, even if it contains no minds that could discover such phenomena. I am not inclined to take that kind of speculation seriously, though I admire other features of Strawson's book.

More interestingly, physicists discuss alternative theories about the fundamental nature of the physical world, and attempt to evaluate those theories in terms of their ability to explain the observations of the physical sciences, e.g. physics, astrophysics, and chemistry. They could also be evaluated in terms of their ability to explain the possibility of the sorts of information processing mechanisms that meet the requirements of animals produced by biological evolution, including humans capable of making mathematical discoveries. This information processing requirement may turn out to add significantly to the physical requirements. An example of such a challenge is discussed in Schrödinger (1944).

I shall now elaborate on each of the three main types of foundation in a little more detail, and relate them to their roles in biological evolution.

1. Neo-Kantian cognitive foundation for mathematics

This is a modified version of Kant's attempt to describe basic features of mathematical minds. They are able to have certain sorts of experience on the basis of which they can discover and prove various kinds of mathematical truth, including truths of arithmetic, topology and geometry. Kant lived before it was possible to specify minds in computational terms, though it seems to me that he was describing requirements for such a specification and moving towards such a specification, though his version of computation, as far as I know, was not restricted to discrete computation like modern computational foundations. For example, his claim that we can discover that there are non-superimposable 3-D structures, such as a right-handed and a left-handed helix does not specify a form of computation, but this intuitive discovery does not seem to make use of discrete operations on discrete symbols. However he was not very clear about the alternatives available, and neither has anyone else been, as far as I know. However I have been trying to assemble candidate examples, e.g.

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/triangle-sum.html

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/shirt.html

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/trisect.html

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/triangles.html

One important modification is a Lakatos-inspired alteration from trying to explain how it is possible to know that space (or experienced space) is Euclidean to trying to explain how it is possible to know that there are at least three distinct ways of experiencing space: Euclidean, elliptical and hyperbolic (all easily illustrated in 2-D surfaces) with a common core and one axiom different, and to derive many theorems common to all, and some true only in a subset. (I suspect that if Kant had known about non-Euclidean geometries he would have modified some, but not all, of his examples.)

Another requirement is to explain how a mathematical mind (like Archimedes' mind?) can discover a simple extension to Euclidean geometry, the neusis construction, that makes it easy to trisect an arbitrary angle.

http://www.cs.bham.ac.uk/research/projects/cogaff/misc/trisect.html

A feature of (a) will be accommodating the evidence from Lakatos that although the mathematical discoveries are non-empirical, mistakes of various kinds are possible, and may need to be repaired.

2. Mathematical foundations for mathematics

This is what meta-mathematicians and some philosophers of mathematics have been seeking in the last two centuries or so, building on the work of thinkers such as Peano, Frege, Dedekind, Cantor, Russell, Brouwer (out on a limb?) and possibly others.

Their goal is to discover or construct a mathematical foundation for mathematics, which may or may not be possible, namely some well-defined subset of mathematics (including logic and set-theory if needed) that suffices as a basis (in some mathematical sense) for all the rest of mathematics.

It seems that there cannot be such a finite foundation that enables any mathematical truth to be proved in a finite number of steps. If there were, all mathematical truths could be enumerated, which Cantor showed to be impossible.

An infinite foundation is trivially possible -- just combine all mathematical results into one system.

However, it is not obvious whether there can be a minimal non-finite foundation namely a part of mathematics from which everything else can be derived, but which loses that power if anything is removed from it.

In principle there could be a unique minimal foundation or there might be several alternative minimal foundations, each of which would have to be capable of proving all the others. (Compare the equivalence of Turing machines, Lambda calculus, Recursive function theory, and Post's production systems?)

3. Metaphysical/physical foundations for mathematics

[I don't know whether this is being investigated by anyone else, though Turing seemed to start on something like this shortly before he died.]

This kind of foundation for mathematics would take the form of a fundamental construction kit provided by the physical universe, from which many different construction kits and forms of scaffolding can be derived which together suffice to generate not only all the mathematically specifiable structures and processes in the physical/chemical universe, but also all the required mechanisms, including mechanisms of biological evolution with all the forms of information processing that produce, and use, new kinds of mathematical structures and processes.


Examples:

-- the mathematical features of quantum mechanics that Schrödinger showed in 1944 enabled long multi-stable molecules to have the properties required for genetically encoded information (transferable across several generations), allowing for *huge* amounts of discretely controlled variability. (Anticipating Shannon?) Schrödinger(1944)

-- the ability to grow physical structures partly under control of a genome that specifies structures with all sorts of mathematical properties, e.g. many kinds of symmetry, Fibonacci sequences, various kinds of stability, various kinds of repetitive behaviours, various kinds of self-replication, etc. (as described by D'Arcy Thompson, Brian Goodwin, Stuart Kauffman, and others ...?)

-- including the ability to produce new physical/chemical construction kits with the mathematical properties required to produce a wide variety of biologically useful parametrisable properties (far more than human-designed construction kits can, e.g. Meccano, Lego, Tinker toys, mud, plasticine, etc.!)

Things produced by new (derived) construction kits include cell membranes, intra-cell mechanisms of various sorts (e.g. microtubules), skin, muscle, molecular energy stores, tendons, hairs, bone, cartilage, many kinds of woody material, capillaries, silk, eggshells, spores, feathers, digestive juices, immune mechanisms, tissue damage detection and repair mechanisms, neurones, a wide variety of sensory detectors, e.g. for light, pressure, temperature, torsion, sound, chemicals, and thousands (millions?) more useful components, many shared across species in parametrised forms because of mathematical commonalities.

[[These make use of implicit mathematical discoveries made by evolution concerning what's possible using available construction kits. New kits change what's possible in particular contexts]]

-- e.g. the physical/chemical mechanisms that encoded homeostatic control based on negative feedback loops and other mathematically useful forms of control, including some that modify feedback loops, e.g. damping mechanisms, or accelerator/decelerator mechanisms.

-- Discovery of mathematical forms that allow physical mechanisms to provide required properties with great economy, e.g. use of triangles or more complex shapes that produce rigidity.

-- various uses of parametrised design to allow for variation within an individual (e.g. during growth), across individuals in a species, and between species,

-- and which eventually produced brains of mathematicians able to make and discuss many deep mathematical discoveries (e.g. those in Euclid's Elements) using still unknown brain mechanisms, which we don't yet know how to implement/model on computers.
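The item in the list above about homeostatic control by negative feedback, with damping mechanisms that modify the loop, can be illustrated by a small simulation. This is a generic control sketch, not a model of any particular physiological mechanism: the set point, gain, damping and step size are assumed values, and the names are hypothetical.

```python
def regulate(setpoint, start, gain, damping, steps, dt=0.1):
    """Simulate a regulated quantity pulled back towards a set point.

    The restoring push is proportional to the error (negative feedback);
    the damping term opposes the current rate of change."""
    value, rate = start, 0.0
    history = [value]
    for _ in range(steps):
        error = setpoint - value
        acceleration = gain * error - damping * rate
        rate += acceleration * dt
        value += rate * dt
        history.append(value)
    return history


# Assumed numbers: a quantity that should sit at 37.0 but starts at 30.0.
damped = regulate(setpoint=37.0, start=30.0, gain=1.0, damping=2.0, steps=200)
undamped = regulate(setpoint=37.0, start=30.0, gain=1.0, damping=0.0, steps=200)

final_error_damped = abs(37.0 - damped[-1])
late_swing_undamped = max(abs(37.0 - v) for v in undamped[-70:])
```

With damping the quantity settles at the set point; with the same feedback but no damping it keeps overshooting, which is why a mechanism that modifies the feedback loop can be as important as the loop itself.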

All this is partly a reaction against claims that Einstein proved Kant wrong.

It is also partly a reaction against claims that mathematics is created by humans, e.g. Wittgenstein's claim that mathematics is an anthropological phenomenon.

It's the other way round: the existence of humans is a result of deep mathematical features of the universe, including increasingly many new features derived from old ones by evolution and its construction kits over billions of years (possibility theorems with existence proofs and implicit impossibility theorems -- e.g. the possibility of a Euclid-like mathematician could not have been proved by evolution within a short time after formation of the planet: far too many lemmas/intermediate theorems were needed).

I sense that all this is more consistent with what Mumford wrote than with most of what I've read about the nature of mathematics, though there are still many missing steps.

I welcome pointers to anything relevant, supporting, contradicting, or improving these ideas.


REFERENCES

- David Deutsch (2011) The Beginning of Infinity: Explanations That Transform the World, Allen Lane and Penguin Books, London.

- Tibor Ganti (2003) The Principles of Life, Eds. Eors Szathmary and James Griesemer, Translation of the 1971 Hungarian edition, with notes, OUP, New York. Usefully summarised in http://wasdarwinwrong.com/korthof66.htm

- Erwin Schrödinger (1944) What is life? CUP, Cambridge. Some extracts from that book, with comments, can be found here: http://www.cs.bham.ac.uk/research/projects/cogaff/misc/schrodinger-life.html

- Aaron Sloman (1965) `Necessary', `A Priori' and `Analytic', Analysis, 26, 1, pp. 12--16, http://www.cs.bham.ac.uk/research/projects/cogaff/62-80.html#1965-02

- P. F. Strawson (1959) Individuals: An essay in descriptive metaphysics, Methuen, London.

Maintained by

Aaron Sloman

School of Computer Science

The University of Birmingham